·AI News Daily·

Today’s News Headlines

- Alibaba Cloud’s Big Move: Create a Custom AI Agent in 5 Minutes, Giving Everyone an Intelligent Assistant!

- AI Video Says Goodbye to “Flicker”? NVIDIA and Stanford Join Forces to Make AI Animations Looooonger!

- AI “Mind Reading” Upgraded! DeepSeek and Tsinghua Tackle the Reward Model Challenge, Making AI Understand You Better!

- Fire and Ice? Hong Kong Web3 Festival Opens Amid Market Volatility, Focusing on Regulation and Opportunity

01 Alibaba Cloud’s Big Move: Create a Custom AI Agent in 5 Minutes, Giving Everyone an Intelligent Assistant!

At the recent Alibaba Cloud AI Momentum Conference, Alibaba Cloud launched an MCP (Model Context Protocol) service on its “Bailian” platform, aiming to transform how AI Agents are created. With this service, even non-professional developers can build customized AI assistants connected to external tools and data sources in as little as 5 minutes, much like assembling building blocks. This should significantly shorten the “last mile” that AI must travel from theory to practical application.

Core Highlights

- Rapid Construction: Using the Bailian MCP service, users can configure a dedicated AI Agent in as little as 5 minutes, without managing underlying resources or handling complex development and deployment, significantly lowering technical barriers and time costs.

- Full-Chain Service: The Bailian platform provides a one-stop Agent development toolchain that integrates computing power, over 200 large models, and a first batch of 50+ MCP services (such as AutoNavi Maps, DingTalk, and Notion), covering a range of life and work scenarios and reducing integration friction.

- Scenario-Driven: Users can flexibly combine different large models and MCP services to suit specific tasks. For example, pairing Tongyi Qianwen with AutoNavi Maps can produce an urban life assistant that checks maps and weather, plans trips, recommends food, and even hails taxis.

- Emerging Ecosystem: As of the end of January, over 290,000 enterprises and developers had called the Tongyi API, spanning industries such as internet, banking, and automotive, which points to a sizable user base. Alibaba Cloud also announced plans for an “AI Agent Store” to build out an ecosystem of Agent applications.
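MCP standardizes how an Agent discovers and calls external tools. The published MCP specification is built on JSON-RPC 2.0 messages, with methods such as `tools/list` (discover available tools) and `tools/call` (invoke a tool by `name` with `arguments`). The sketch below is a minimal, in-process illustration of that request/response shape only. It assumes two hypothetical tools (`get_weather`, `plan_route`) with stubbed results; it is not Bailian's API and does not connect to AutoNavi Maps or any real service.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical stand-ins for MCP services (e.g. a maps or weather provider).
# Tool names, parameters, and return strings are illustrative stubs only.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def plan_route(origin: str, destination: str) -> str:
    return f"Route from {origin} to {destination}"

# Registry: each tool advertises a description and a JSON Schema for its input.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_weather": {
        "description": "Current weather for a city (stub).",
        "inputSchema": {"type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"]},
        "handler": get_weather,
    },
    "plan_route": {
        "description": "Route between two places (stub).",
        "inputSchema": {"type": "object",
                        "properties": {"origin": {"type": "string"},
                                       "destination": {"type": "string"}},
                        "required": ["origin", "destination"]},
        "handler": plan_route,
    },
}

def _error(req_id: Any, code: int, message: str) -> str:
    # Standard JSON-RPC 2.0 error object.
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})

def handle_request(raw: str) -> str:
    """Answer a JSON-RPC 2.0 request for the tools/list or tools/call method."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    if method == "tools/list":
        tools = [{"name": name, "description": t["description"],
                  "inputSchema": t["inputSchema"]} for name, t in TOOLS.items()]
        result: Dict[str, Any] = {"tools": tools}
    elif method == "tools/call":
        params = req.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(req_id, -32602, "Unknown tool")
        handler: Callable[..., str] = tool["handler"]
        text = handler(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})

# Usage: an Agent asking the (stubbed) weather tool about Hangzhou.
call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"city": "Hangzhou"}}}
print(json.loads(handle_request(json.dumps(call)))["result"]["content"][0]["text"])
# prints: Sunny in Hangzhou
```

In practice the large model chooses which tool to call and with what arguments; a real MCP server would also implement the `initialize` handshake and a transport such as stdio or HTTP, which this sketch leaves out.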

Researcher’s Thoughts

- For Practitioners: The barrier to creating and deploying custom AI Agents has been significantly lowered, giving SMEs and independent developers more opportunities to implement innovative ideas quickly. Developers can shift their focus from underlying technology to business logic and user experience. However, they should also weigh how mature the platform's ecosystem is and the risk of vendor lock-in.

- For the General Public: In the future, it will be easier to access and use various “small yet beautiful,” highly customized AI assistants. These Agents can better understand specific scenario needs and provide more precise services, whether it’s improving work efficiency (automated reports) or enriching life (personalized travel planning), making everything smarter and more convenient.

Recommended Reading

- Alibaba Cloud AI Momentum Conference Official Review

- Introduction to Alibaba Cloud Bailian Platform and MCP Service

- Details of Alibaba Cloud AI Agent Store Plan

02 AI Video Says Goodbye to “Flicker”? NVIDIA and Stanford Join Forces to Make AI Animations Looooonger!

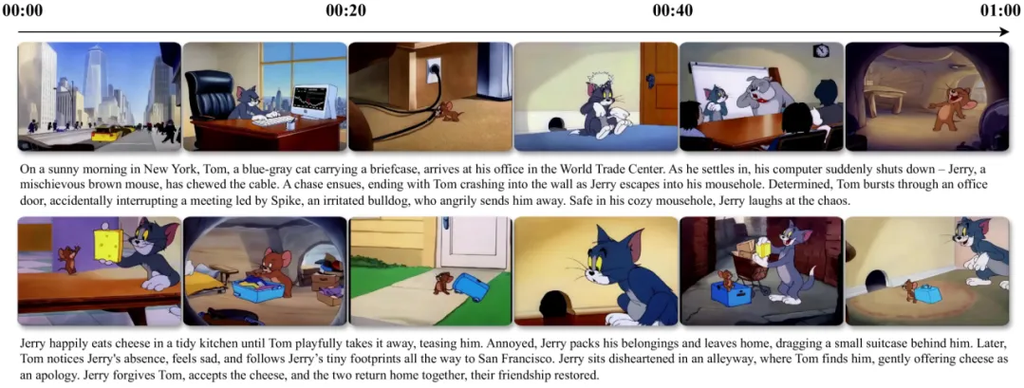

Current AI video generation commonly suffers from short durations and poor coherence. Researchers from NVIDIA, Stanford University, and other institutions have jointly proposed a technique based on “Test-Time Training” (TTT) to address this pain point. They generated cartoon animations up to one minute long with coherent plots and consistent styles, signaling that AI video creation is moving beyond the “flicker” era toward longer-form storytelling.

Core Highlights

- Duration Breakthrough: TTT technology enables AI models to generate video content up to one minute long while maintaining high consistency across multiple scene transitions, marking a significant step for AI video from generating clips to narrative creation.

- Memory Mechanism: The key to TTT lies in its unique handling of hidden states – these states are themselves small neural networks that can be updated in real-time (trained) during video generation (inference). This gives the model a stronger “memory” to maintain content coherence over a long time window.

- Stunning Results: The research team demonstrated this by generating a new animated story in the style of “Tom and Jerry,” complete with multiple scenes, character interactions, and dynamic movements. The story was generated directly by the model in one go without manual splicing, showcasing the technology’s potential in narrative and style consistency.

- Easy Integration: TTT is not an entirely new architecture; it can be implemented by adding TTT layers to an existing pre-trained video model (such as CogVideoX) and fine-tuning. This means powerful existing base models could be reused to bring long video generation capabilities to a wider audience quickly.
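To make the “hidden state as a trainable model” idea concrete, here is a minimal sketch of a TTT-style layer in plain Python. Assumptions, all for illustration: the hidden state is a single linear map `w` (the simplest possible case of the “small neural network” described above), the self-supervised loss is the squared error ||w·k − v||² between projected views of each token, and `proj_k`, `proj_v`, `proj_q`, and the learning rate `lr` are arbitrary. `gated_residual` is a hypothetical way to attach such a layer to a pre-trained model so that it initially leaves the original behavior untouched. This is not the NVIDIA/Stanford code.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]

def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def ttt_linear(xs: List[Vector], proj_k: Matrix, proj_v: Matrix,
               proj_q: Matrix, lr: float = 0.1) -> Tuple[List[Vector], Matrix]:
    """Process tokens in order, taking one gradient step on the hidden state per token."""
    d = len(proj_k)
    w = [[0.0] * d for _ in range(d)]  # hidden state: weights of a tiny linear model
    outputs = []
    for x in xs:
        k, v, q = matvec(proj_k, x), matvec(proj_v, x), matvec(proj_q, x)
        err = [p - t for p, t in zip(matvec(w, k), v)]  # w·k - v
        # "Training at test time": gradient of ||w·k - v||^2 w.r.t. w is 2·err·k^T
        for i in range(d):
            for j in range(d):
                w[i][j] -= lr * 2.0 * err[i] * k[j]
        outputs.append(matvec(w, q))  # read out with the freshly updated state
    return outputs, w

def gated_residual(x: Vector, ttt_out: Vector, alpha: float) -> Vector:
    """Hypothetical gate: with tanh(alpha) = 0 the pre-trained path x passes through unchanged."""
    g = math.tanh(alpha)
    return [xi + g * oi for xi, oi in zip(x, ttt_out)]

# Usage: feed the same token repeatedly and watch the state "memorize" it.
eye = [[1.0, 0.0], [0.0, 1.0]]
outs, w = ttt_linear([[1.0, 0.0]] * 20, eye, eye, eye)
print(outs[0], outs[-1])
```

With identity projections and a repeated token, each step moves `w` closer to reconstructing that token, so later outputs approach the input; this is the “memory” effect in miniature. A real TTT layer learns its projections during fine-tuning and updates far larger states over long token sequences.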

Researcher’s Thoughts

- For Practitioners: For professionals like animators and video creators, TTT technology could bring revolutionary changes, significantly lowering the barriers and costs of producing long-form coherent content, upgrading AI from a special effects tool to a narrative assistant. The new challenge lies in precisely controlling the creative details (plot, emotion, style) of AI-generated content.

- For the General Public: In the future, we may see more long-form video content assisted or generated by AI, such as entertainment shorts, personalized animations, educational demonstrations, etc., which will be richer and more coherent. Ordinary users might also be able to easily create longer, more complex personal videos using tools integrated with TTT. However, initial adoption might be limited by the high computational cost required for “test-time training.”

Recommended Reading

- Explanation of TTT Technology Principles

- Demonstration of TTT Generating “Tom and Jerry” Style Animation

03 AI “Mind Reading” Upgraded! DeepSeek and Tsinghua Tackle the Reward Model Challenge, Making AI Understand You Better!

In Large Language Model (LLM) training, the Reward Model (RM) acts like a “tutor,” guiding the model to align with human preferences. However, existing RMs perform poorly when dealing with “general domain” queries that have complex evaluation criteria and high subjectivity. DeepSeek Technology and Tsinghua University have collaborated to propose an innovative reward modeling method aimed at enabling AI to more accurately understand complex human intentions and optimize the quality of LLM responses.

Core Highlights

- Core Breakthrough: This research directly addresses the challenges faced by existing reward models in handling unstructured, subjective tasks like open-ended Q&A and creative writing, striving to make AI more accurately “grasp” the user’s true intent.

- Two-Pronged Approach: The new method combines two techniques: Generative Reward Modeling (GRM), which uses natural language to produce richer, interpretable reward feedback (instead of a single score); and Self-Principled Critique Tuning (SPCT), a new online training method that teaches the GRM to adaptively generate evaluation principles and critiques.

- Inference Scaling: The most innovative aspect is demonstrating that “inference-time scaling” works: during model usage (inference), spending more computation (e.g., sampling multiple times and aggregating the results) can significantly improve the reward model’s judgment accuracy, rather than relying solely on a larger model at training time.

- Potential Advantages: Research indicates that for enhancing RM performance, inference-time scaling might be more effective than simply scaling up the training model size. This suggests that in the future, relatively smaller models could achieve or even surpass the performance of larger models by investing more inference computation when needed, offering a more economical and flexible path for deploying high-performance AI.
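The voting idea behind inference-time scaling can be sketched as follows: sample the generative reward model several times, parse the scores out of each natural-language critique, and sum them per candidate response. Everything here is an assumption for illustration: the `Response N: S` score format, the 1 to 10 scale, and `make_noisy_judge`, which fakes GRM samples with seeded random noise instead of calling DeepSeek's model.

```python
import random
import re
from typing import Callable, List

# Hypothetical critique format: "... Scores: Response 1: 7, Response 2: 5"
SCORE_PATTERN = re.compile(r"Response (\d+): (\d+)")

def parse_scores(critique: str, n_responses: int) -> List[int]:
    """Extract per-response scores from one generated critique (missing ones count as 0)."""
    scores = [0] * n_responses
    for idx, score in SCORE_PATTERN.findall(critique):
        i = int(idx) - 1
        if 0 <= i < n_responses:
            scores[i] = int(score)
    return scores

def vote(judge: Callable[[int], str], n_responses: int, k: int) -> List[int]:
    """Inference-time scaling by voting: sum the scores of k sampled critiques."""
    totals = [0] * n_responses
    for seed in range(k):
        for i, s in enumerate(parse_scores(judge(seed), n_responses)):
            totals[i] += s
    return totals

def make_noisy_judge(true_quality: List[int], noise: int = 2) -> Callable[[int], str]:
    """Hypothetical stand-in for sampling a GRM: true quality plus seeded noise, clamped to 1..10."""
    def judge(seed: int) -> str:
        rng = random.Random(seed)
        parts = []
        for i, q in enumerate(true_quality, start=1):
            s = min(10, max(1, q + rng.randint(-noise, noise)))
            parts.append(f"Response {i}: {s}")
        return "Principles: ...\nCritique: ...\nScores: " + ", ".join(parts)
    return judge

# Usage: compare a single sample with many samples for two candidate answers.
judge = make_noisy_judge([7, 5])
print(vote(judge, n_responses=2, k=1))   # one sample: the ranking can be flipped by noise
print(vote(judge, n_responses=2, k=32))  # many samples: noise tends to average out
```

Raising `k` spends more inference compute without changing the model itself, which is the trade-off the research highlights: a steadier judgment bought at inference time rather than with a bigger model.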

Researcher’s Thoughts

- For Practitioners: This work provides new tools for improving LLM alignment, reducing “hallucinations,” and enhancing complex reasoning capabilities. High-quality reward signals are fundamental to training more reliable AI systems. The concept of “inference-time scaling” could influence future model training, evaluation, and deployment strategies. DeepSeek plans to open-source the GRM model, which will accelerate community exploration.

- For the General Public: In the long run, this means future AI assistants, chatbots, etc., will be more “intelligent” and “considerate,” able to more accurately understand our vague or value-laden requests, providing responses that better meet expectations and are more helpful. This reduces misunderstandings in human-computer interaction and enhances the usability and safety of AI tools.

Recommended Reading

- DeepSeek / Reward Model Research

04 Fire and Ice? Hong Kong Web3 Festival Opens Amid Market Volatility, Focusing on Regulation and Opportunity


This week, one of Asia’s major crypto industry events, the 2025 Hong Kong Web3 Festival, opened against a backdrop of global financial market turmoil and a sharp pullback in cryptocurrency prices. While market sentiment outside the venue was chilly, Hong Kong officials conveyed cautious optimism about local Web3 development and emphasized their determination to balance innovation and regulation. The event highlights how challenges and opportunities currently coexist in the Web3 space.

Core Highlights

- Market Background: Coinciding with rising global risk aversion, the price of Bitcoin dropped significantly, once again demonstrating its strong correlation with traditional risk assets and reminding the industry of the macroeconomic environment’s influence on the crypto market.

- Official Tone: Hong Kong’s Financial Secretary, Paul Chan Mo-po, reiterated support for Web3 development, emphasizing “achieving a balance between promoting development and preventing risks.” Senior executives from the Securities and Futures Commission (SFC) also spoke, promoting Hong Kong as a development base and outlining progress in virtual asset regulation (such as new guidelines on staking).

- Policy Focus: Discussions at the conference focused on future US crypto policies (Trump’s stance seen as potentially positive) and Hong Kong’s own regulatory improvement plans (introducing stablecoin legislation, regulating OTC and custody within the year).

- Industry Dynamics: Binance co-founder He Yi participated via video, and Ethereum co-founder Vitalik Buterin made a surprise appearance. Key figures remain active despite regulatory pressure, showcasing the industry’s resilience. He Yi encouraged Chinese entrepreneurs to maintain confidence.

Researcher’s Thoughts

- For Practitioners: The Hong Kong Web3 Festival showcased the dual reality of the industry: technological exploration and regulatory frameworks (especially in Hong Kong) are becoming clearer, but macroeconomic factors, geopolitics, and policy uncertainties have far-reaching impacts. Companies need to strengthen risk management, pay attention to global regulations, and potentially consider establishing a presence in regions with relatively stable expectations like Hong Kong.

- For the General Public / Investors: Recent market volatility serves as a warning that cryptocurrencies are high-risk investments with increasing correlation to traditional financial markets; one should not blindly believe the “safe haven” myth. While the regulated development of Web3 in places like Hong Kong may bring opportunities, caution is still necessary, paying attention to market and regulatory risks.

Recommended Reading

- Hong Kong Web3 Festival 2025 Official Information

Today’s Summary

The AI field is experiencing a significant wave of “efficiency enhancement” and “quality improvement”:

- Development Barriers Significantly Lowered: Alibaba Cloud’s “Bailian” platform makes building customized AI Agents accessible, promising completion in just 5 minutes, indicating that the popularization of AI applications will further accelerate.

- Core Capabilities Continue to Break Through: Technology from NVIDIA and Stanford promises to free AI video from “flicker” limitations, enabling long-duration, highly coherent content generation. Meanwhile, innovations in reward models by DeepSeek and Tsinghua aim to make AI more accurately understand and align with complex human intentions and preferences, enhancing AI’s “emotional intelligence” and reliability.

At the same time, the implementation of cutting-edge technologies still needs to face market and regulatory tests:

- Web3 Industry’s “Fire and Ice”: The Hong Kong Web3 Festival, held amidst market downturn pressure, clearly reflects that even with policy support and innovative enthusiasm, macroeconomic fluctuations and the gradual improvement of regulatory frameworks are cycles the industry must navigate.

About Us

🚀 Leading enterprise digital transformation, shaping the future of the industry together. We specialize in creating customized digital systems integrated with AI, achieving intelligent upgrades and deep integration of business processes. Relying on a core team with top technology backgrounds from MIT, Microsoft, etc., we help you build powerful AI-driven infrastructure, enhance efficiency, drive innovation, and achieve industry leadership.