AI News Daily

Shopify AI First / Gemini Visual Interaction / NVIDIA Efficient Model

📌 Table of Contents:

🛒 Shopify’s New Rule: Hiring? First Ask if AI Can Do the Job

👀 Google Upgrades Gemini Live: Giving Phones “Eyes and Ears,” Real-time Visual Interaction Lands

🚀 NVIDIA Open-Sources Nemotron Ultra: 253B Parameters Challenge Giants, Inference Efficiency Soars 4x

💎 Bulgari Partners with Vision Pro: Opening a New Chapter in Immersive Digital Experiences for High Jewelry

🗣️ ElevenLabs Releases MCP Server: Connecting AI Voice Interaction, Empowering Outbound Calls and Content Creation

PART 01

🛒 Shopify’s New Rule: Hiring? First Ask if AI Can Do the Job

What’s the Highlight?

E-commerce giant Shopify is implementing a noteworthy “AI First” internal policy. CEO Tobi Lütke requires that before any team requests additional personnel or resources, they must first demonstrate why the relevant tasks cannot be accomplished by AI. This policy not only reshapes the recruitment process but also incorporates AI application capabilities into employee performance evaluations, clearly elevating AI from an auxiliary tool to a fundamental operational capability of the company, aiming to comprehensively enhance efficiency and innovation potential.

Core Points:

- Mandatory Requirement: The company sets “using AI reflexively” as a basic expectation, applicable to all employees from the CEO down, emphasizing that failing to learn AI will lead to stagnation.

- Hiring Gate: Before requesting additional headcount, teams must first evaluate whether AI could do the work and rule that option out, effectively treating AI as a potential “virtual employee.”

- Performance Integration: AI application ability, willingness to learn, and knowledge sharing will be incorporated into the formal employee performance evaluation and peer review systems.

- Tool Empowerment: The company commits to providing cutting-edge AI tools (such as GitHub Copilot, Claude, and the in-house chat.shopify.io) and is investing heavily in its own AI features (such as the Sidekick assistant and the Shopify Magic suite), making the AI First strategy practical to follow.

What Can Practitioners Consider?

- For Practitioners: A clear warning that AI capability is shifting from a “bonus” to a “necessity.” Future work focus may shift from execution to using AI for strategic thinking and innovation. Managers need to change their mindset, prioritize AI solutions, and assess team AI literacy.

- For Ordinary People/Industry: Shopify’s move might trigger an industry demonstration effect, accelerating AI integration in business scenarios, while also intensifying concerns about AI replacing jobs. It reflects a challenge every business faces in the AI wave: balancing technological efficiency with human capital development. The policy could be a pragmatic cost-cutting and efficiency measure, a strategic bet on the future, or a way to validate and promote Shopify’s own AI products through internal use (dogfooding). However, it might also widen the “AI literacy gap.” In the long run, it could shift hiring demand toward advanced roles that require distinctly human judgment and creativity.

PART 02

👀 Google Upgrades Gemini Live: Giving Phones “Eyes and Ears,” Real-time Visual Interaction Lands

What’s the Highlight?

Google has begun rolling out a major update to Gemini Live, the real-time voice conversation mode of its Gemini assistant, integrating the core visual capabilities of Project Astra. Users can now share their phone camera feed or their screen in real time, allowing Gemini to “see” and understand visual information during multimodal voice conversations. This makes interaction with the assistant more natural and context-aware. The feature is currently rolling out to select Gemini Advanced subscribers on Android devices.

Core Points:

- Real-time Vision: Gemini Live adds two visual inputs: a live video stream from the phone camera, for perceiving the physical world, and real-time screen sharing, for understanding what is happening on the device. This marks the first broad consumer rollout of Project Astra technology.

- Multimodal Interaction: Users can conduct voice conversations while displaying visual information. Gemini can fuse and process visual and auditory information to understand user intent and respond, such as pointing at objects to ask questions or requesting summaries of screen content.

- Gradual Rollout: Starting late March 2025, the feature is being rolled out in phases, prioritizing Gemini Advanced subscribers on Android platforms (like Pixel 9, Galaxy S25 series), with the goal of covering a wider range of Android devices.

- User Experience: Initial feedback describes an intuitive interface and smooth interaction, but also reports issues with close-range focus, automatic lens switching, and the lack of manual zoom. Screen sharing feels somewhat less polished than the camera mode. The model occasionally “hallucinates,” so users still need to check its answers critically.

What Can Practitioners Consider?

- For Practitioners: Opens new doors for developers building applications such as AR assistance, interactive education, intelligent visual search, and automated UI testing. This is a significant step toward more natural, low-friction human-computer interaction. However, continuous camera and screen streams also raise serious privacy and security challenges, so data protection demands close attention.

- For Ordinary People/Industry: Makes AI assistants “smarter,” able to offer more immediate, practical help in daily life (e.g., identifying objects, translating menus). Google’s lead in multimodal interaction may push the rest of the industry to accelerate R&D. Initially tying the feature to high-end devices and paid subscriptions could drive hardware sales and subscription growth, strengthening the Android ecosystem. The move reflects Google’s core strategy of rapidly integrating cutting-edge AI into its existing products and business ecosystem, while also highlighting the technical difficulty of real-time multimodal processing. It is a crucial step for Google toward “embodied intelligence,” with potential future expansion to smart glasses, robots, and other devices.

Related Link 🔗:

https://blog.google/technology/ai/google-ai-updates-march-2025

PART 03

🚀 NVIDIA Open-Sources Nemotron Ultra: 253B Parameters Challenge Giants, Inference Efficiency Soars 4x

What’s the Highlight?

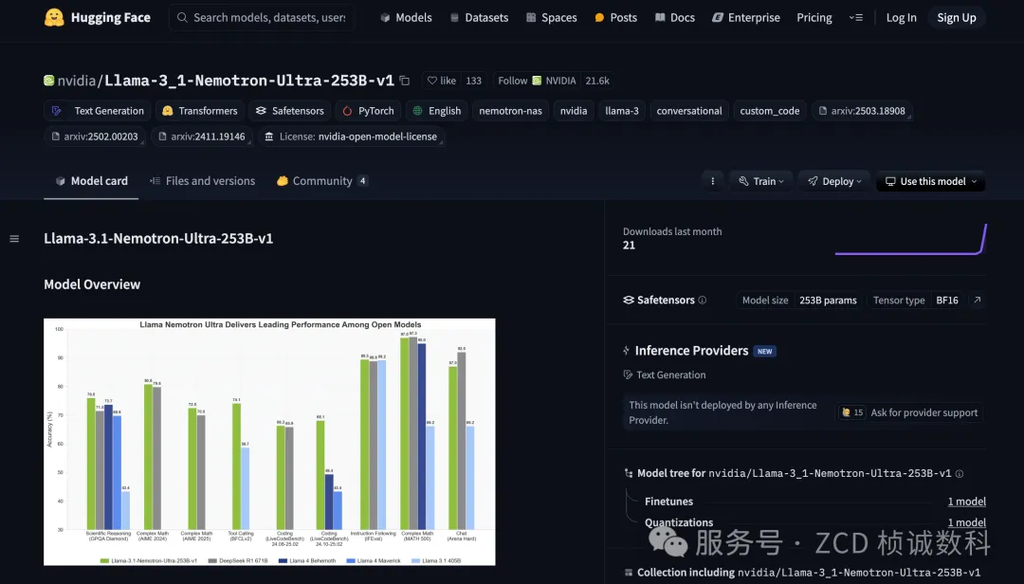

Chip giant NVIDIA has open-sourced Llama-3.1-Nemotron-Ultra-253B-v1 on Hugging Face, a large model derived from Meta’s Llama-3.1-405B. Using techniques such as Neural Architecture Search (NAS), NVIDIA reduced the parameter count to 253 billion, yet the model matches or beats larger models (such as the 671B DeepSeek R1) on several benchmarks and, by NVIDIA’s account, delivers about 4x the inference throughput of DeepSeek R1. The model supports a 128K context window, runs efficiently on a single node with 8x H100 GPUs, and is open for commercial use.
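
The single-node claim is easy to sanity-check with back-of-the-envelope arithmetic. The sketch below assumes BF16 weights (2 bytes per parameter) and H100 80GB cards, and counts weights only; KV cache, activations, and runtime overhead are ignored, and NVIDIA’s actual deployment setup (precision, parallelism) may differ.

```python
# Back-of-the-envelope weight memory for a dense model, assuming BF16
# weights (2 bytes per parameter). KV cache, activations, and runtime
# overhead are ignored, so real deployments need extra headroom.

BYTES_PER_PARAM_BF16 = 2
H100_MEMORY_GB = 80  # H100 80GB variant


def weight_memory_gb(params_billion: float, bytes_per_param: int = BYTES_PER_PARAM_BF16) -> float:
    """Billions of parameters x bytes per parameter = gigabytes (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


def fits_on_node(params_billion: float, num_gpus: int = 8, gpu_memory_gb: float = H100_MEMORY_GB) -> bool:
    """True if the weights alone fit in the node's combined GPU memory."""
    return weight_memory_gb(params_billion) <= num_gpus * gpu_memory_gb


for name, params_b in [("Nemotron Ultra 253B", 253), ("Llama-3.1-405B", 405)]:
    print(
        f"{name}: ~{weight_memory_gb(params_b):.0f} GB of BF16 weights; "
        f"fits on 8x H100 80GB: {fits_on_node(params_b)}"
    )
```

Under these assumptions the 253B model needs about 506 GB against 640 GB of combined GPU memory, while its 405B parent (about 810 GB) would not fit on the same node in BF16.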

Core Points:

- Efficient Performance: At 253B parameters, it performs strongly on benchmarks such as GPQA (graduate-level Q&A), IFEval (instruction following), and LiveCodeBench (coding), surpassing the 671B DeepSeek R1 on some of them and achieving an outstanding performance-to-efficiency ratio.

- Architecture Optimization: NAS introduced non-standard model blocks (such as skipped attention, variable-width FFN layers, and FFN fusion), significantly reducing memory and compute requirements, while knowledge distillation and continued pre-training preserve high-quality output.

- Inference Acceleration: Thanks to parameter reduction and architectural optimization, inference throughput is boosted 4x compared to DeepSeek R1 671B, implying lower operational costs and faster response times.

- Open for Commercial Use: Model weights and some post-training data are publicly available on Hugging Face under an open model license, permitting commercial application. Designed with deployment efficiency in mind, optimized for H100 and future B100 GPUs.
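
To see why block-level choices like “skip attention” and “variable FFN” shrink a model, the sketch below counts parameters per transformer block using the Llama-3.1-405B dimensions published by Meta (hidden size 16384, 126 layers, 128 query heads, 8 KV heads with head dimension 128, FFN width 53248). The searched layer pattern is purely hypothetical and is not NVIDIA’s actual configuration; embeddings, norms, and FFN fusion are not modeled.

```python
from dataclasses import dataclass
from typing import List

# Llama-3.1-405B dimensions (Meta's published architecture).
HIDDEN = 16384
HEAD_DIM = 128
N_Q_HEADS = 128
N_KV_HEADS = 8
FFN_WIDTH = 53248
N_LAYERS = 126


@dataclass
class BlockConfig:
    use_attention: bool = True   # False models a "skip attention" block
    ffn_multiplier: float = 1.0  # < 1.0 models a narrower (variable-width) FFN


def attention_params() -> int:
    # Q and O projections are HIDDEN x (heads * head_dim); K and V are smaller
    # because grouped-query attention shares 8 KV heads across 128 query heads.
    q_o = 2 * HIDDEN * N_Q_HEADS * HEAD_DIM
    k_v = 2 * HIDDEN * N_KV_HEADS * HEAD_DIM
    return q_o + k_v


def ffn_params(multiplier: float) -> int:
    # SwiGLU FFN: gate, up, and down projections, each HIDDEN x width.
    width = int(FFN_WIDTH * multiplier)
    return 3 * HIDDEN * width


def block_params(cfg: BlockConfig) -> int:
    attn = attention_params() if cfg.use_attention else 0
    return attn + ffn_params(cfg.ffn_multiplier)


def total_params(configs: List[BlockConfig]) -> int:
    return sum(block_params(c) for c in configs)


baseline = [BlockConfig() for _ in range(N_LAYERS)]

# Hypothetical "searched" stack: every third block drops attention and every
# odd-indexed block uses a half-width FFN. Illustration only.
searched = [
    BlockConfig(use_attention=(i % 3 != 0), ffn_multiplier=0.5 if i % 2 else 1.0)
    for i in range(N_LAYERS)
]

print(f"baseline blocks: {total_params(baseline) / 1e9:.1f}B params")
print(f"searched blocks: {total_params(searched) / 1e9:.1f}B params")
```

The uniform 126-block stack counts roughly 401.6B parameters (most of the remaining few billion in the 405B total are embeddings), and any pattern that skips attention or narrows FFNs in some blocks lands below that. A real NAS run also scores each candidate block for accuracy and latency, which this parameter count does not capture.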

What Can Practitioners Consider?

- For Practitioners: Challenges the notion that more parameters are always better, showing that a mid-sized model can reach top-tier performance with high efficiency through optimization, which gives resource-constrained teams new options. Its strong capabilities make it a solid open-source foundation for chatbots, AI Agents, RAG applications, and similar systems, and the commercial-use license lowers the barrier to entry. Its optimization techniques (NAS and others) also offer valuable lessons for the field.

- For Ordinary People/Industry: Open access to high-performance models lowers the innovation threshold and helps spread AI applications. Because the model is optimized for NVIDIA GPUs, it reinforces NVIDIA’s hardware market leadership. By optimizing top open-source models and contributing them back to the community, NVIDIA enters model competition in a new way, potentially reshaping the open-source landscape. The move is a carefully planned “hardware-software synergy” strategy that makes NVIDIA hardware more attractive. The model’s efficiency may also push the industry to pay more attention to efficiency metrics, steering AI toward more practical applications. Building on Llama is a pragmatic strategy: it quickly leverages an existing ecosystem and reduces R&D risk.

Related Link:

https://huggingface.co/nvidia/Llama-3_1-Nemotron-Ultra-253B-v1

PART 04

💎 Bulgari Partners with Vision Pro: Opening a New Chapter in Immersive Digital Experiences for High Jewelry

What’s the Highlight?

Italian luxury jewelry brand Bulgari (BVLGARI) has launched the “Bvlgari Infinito” app in the Apple Vision Pro App Store, becoming one of the first brands to leverage Apple’s spatial computing platform to offer an immersive high jewelry experience. Utilizing Vision Pro’s 3D visualization, real-time rendering, and other technologies, the app allows users to explore Bulgari’s iconic jewelry (the first chapter focuses on the Serpenti collection) up close in an unprecedented way, combined with digital artworks, redefining the digital presentation and interaction of luxury goods.

Core Points:

- Luxury Debut: Bulgari takes the lead by launching on the Vision Pro platform, demonstrating its commitment to embracing digital innovation and exploring the future of luxury experiences, setting an industry trend.

- Spatial Computing: Fully leverages Vision Pro’s technological advantages (spatial computing, high-resolution 3D visuals, real-time rendering, dynamic storytelling) to enable virtual “touching” and multi-angle observation of jewelry details, offering an immersive experience beyond traditional media.

- Serpenti Chapter: The initial content focuses on the brand’s iconic Serpenti collection, timed to coincide with the Lunar Year of the Snake and an offline exhibition of the same name, letting users explore the design and craftsmanship of high jewelry necklaces in depth.

- Art & Technology: Includes digital artworks created for the brand by digital artist Refik Anadol and behind-the-scenes stories, reflecting the exploration of integrating traditional jewelry art with cutting-edge digital technology.

What Can Practitioners Consider?

- For Practitioners (Luxury/Tech): Vision Pro opens up a new frontier for luxury brands in immersive storytelling and product presentation, enabling more vivid communication of brand value, compensating for online experience shortcomings, and enhancing emotional connection. Early practices by brands like Bulgari provide reference cases for the industry, showcasing different strategies like focusing on brand stories (Bulgari/Gucci) or immersive shopping (Mytheresa).

- For Ordinary People/Consumers: Offers a rare opportunity to engage with and appreciate high jewelry art immersively, learning about design stories and craftsmanship. It foreshadows future virtual shopping formats: highly realistic product interaction, perhaps even virtual “try-ons.” Enriches the Vision Pro’s non-gaming/productivity app ecosystem, increasing the device’s appeal to specific demographics. Bulgari’s choice of Vision Pro is deliberate; the platform’s high-end tech positioning aligns with the brand, reaching core target audiences and reinforcing its innovative image. The app’s focus on brand narrative rather than sales aligns with luxury brands’ initial strategies on emerging media. Combining the online app with offline exhibitions and cultural moments creates integrated marketing, boosting influence.

PART 05

🗣️ ElevenLabs Releases MCP Server: Connecting AI Voice Interaction, Empowering Outbound Calls and Content Creation

What’s the Highlight?

Voice AI unicorn ElevenLabs has released its official MCP (Model Context Protocol) server. MCP is an open protocol designed to standardize interactions between AI models and external tools. Through this server, MCP-supported AI assistants (like Claude) can directly and seamlessly invoke ElevenLabs’ full suite of AI audio capabilities, including Text-to-Speech (TTS), Speech-to-Text (STT), voice cloning, voice design, and even AI phone calling. This significantly lowers the barrier to building complex voice interaction applications, especially benefiting AI phone outbound calling and AI content creation scenarios.

Core Points:

- Protocol Bridge: The official MCP server acts as a standardized “bridge,” connecting powerful AI models (like Claude) with ElevenLabs’ specialized voice capabilities for efficient collaboration.

- Capability Access: Developers can use natural language commands to have AI assistants call upon ElevenLabs’ entire core voice technology suite (TTS, STT, voice cloning, voice design, conversational AI, AI outbound calling).

- Simplified Integration: Provides a unified, standardized interface. Developers don’t need to write extensive custom code; interaction happens via the MCP protocol, greatly simplifying the integration process and reducing development costs.

- Scenario Empowerment: Directly catalyzes potential in two major areas: AI phone outbound calling (building customized AI customer service/sales agents using realistic voice cloning) and AI content creation (automating the generation of high-quality narration/voiceovers, enabling content production automation).
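
The “unified interface” point is easier to picture at the message level. MCP runs on JSON-RPC 2.0: a client such as Claude first asks a server what it offers (`tools/list`), then invokes a tool (`tools/call`) with a name and JSON arguments. The sketch below only builds those payloads; the tool name `text_to_speech` and its `text` argument are illustrative assumptions, not confirmed details of the ElevenLabs server.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# MCP messages are JSON-RPC 2.0 objects. This sketch only builds request
# payloads; the transport (stdio or HTTP), the initialize handshake, and
# authentication are deliberately left out.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request with a fresh, increasing id."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def list_tools() -> Dict[str, Any]:
    """Ask an MCP server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Invoke a single tool by name with JSON-serializable arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


# Hypothetical tool name and argument key: read the real ones from the
# server's tools/list response rather than hard-coding them.
request = call_tool("text_to_speech", {"text": "Your table for two is booked for 7 pm."})
print(json.dumps(request, indent=2))
```

The design point is that the client needs no ElevenLabs-specific SDK code: the same two request shapes work against any MCP server, which is what lets an assistant combine voice tools with other MCP capability modules.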

What Can Practitioners Consider?

- For Practitioners (Developers/Content Creators): Developers can quickly integrate top-tier voice capabilities using simple protocols or even natural language, accelerating development. Combining LLMs with realistic voice allows for creating unprecedented intelligent natural interaction experiences (e.g., AI making restaurant reservations). For content creators, it’s a revolutionary tool for automating narration/voiceovers, reducing costs and increasing efficiency.

- For Ordinary People/Industry: The spread of the MCP standard and the arrival of key components like this one signal that more capable AI Agents with more natural interactions will develop faster and enter daily life. In the future, even non-specialist users might be able to assemble complex AI services. As an open standard, MCP helps break down tool silos, promotes interoperability, sparks innovation, and fosters a thriving AI ecosystem. For ElevenLabs, the move is a strategic evolution from technology provider to key enabler in the AI Agent ecosystem, solidifying its leading position. The rise of the MCP ecosystem suggests AI development will become more “modular,” letting developers combine capability modules to build complex systems rapidly. However, this progress also brings challenges: potential disruption to traditional call centers, voice acting jobs, and more. And because voice cloning has become dramatically easier to use, it could be misused, raising ethical and societal issues that urgently call for robust regulation and oversight.

Related Link:

https://github.com/elevenlabs/elevenlabs-mcp

AI Tech News Daily Summary

Analyzing recent developments in the AI field reveals several clear trends: AI is moving from an auxiliary tool to being deeply integrated into core business processes; multimodal interaction is becoming key to the evolution of AI assistants; model efficiency and openness are gaining more attention; meanwhile, AI technology is rapidly penetrating various vertical sectors and catalyzing innovation. Together, these paint a picture of an accelerating AI implementation, reshaping business rules and human-computer interaction paradigms, requiring businesses and individuals to adapt proactively, enhance AI literacy, and pay attention to both opportunities and challenges.

ZC Digitals

🚀 Leading enterprise digital transformation, shaping the future of industries together. We specialize in creating customized digital systems integrated with AI, achieving intelligent upgrades and deep integration of business processes. Relying on a core team with top tech backgrounds from MIT, Microsoft, etc., we help you build powerful AI-driven infrastructure, improve efficiency, drive innovation, and achieve industry leadership.