Today’s News Highlights

🔬 FutureHouse Launches Superhuman AI Research Agent Platform, Reshaping the Paradigm of Scientific Discovery

🗣️ Tavus Introduces CVI: Enabling AI with Real-time Video Interaction Capabilities to “Read Faces and Understand Emotions”

🩺 Google AMIE “Sees Clearly”: Does Multimodal AI Diagnostic Capability Surpass Human Doctors?

🦉 Duolingo Fully Embraces AI: Efficiency Gains and Workforce Adjustments Go Hand in Hand

01 🔬 FutureHouse Launches AI Research Agent Platform, Reshaping the Paradigm of Scientific Discovery

FutureHouse, a non-profit research institution backed by former Google CEO Eric Schmidt, has officially launched its highly anticipated AI agent platform. The platform targets a major bottleneck in scientific research: how to effectively process and use vast bodies of information, such as the 38 million papers in the PubMed database and more than 500,000 clinical trials. It launches with four “super-intelligent” AI agents designed specifically for scientific research (Crow, Falcon, Owl, and Phoenix), which together cover literature retrieval, in-depth review, and chemical experiment planning. Notably, these agents demonstrated literature processing capabilities surpassing those of PhD researchers in benchmark tests, marking a new stage in AI-assisted scientific discovery. FutureHouse says it is committed to making these tools accessible to researchers worldwide.

Key Highlights

Research Pain Points: The platform directly addresses the data overload facing the scientific community, where the sheer volume of existing literature and clinical data makes information integration extremely difficult and limits research efficiency.

Platform Launch: The FutureHouse platform is officially online, providing an easy-to-use web interface and an API, with the goal of making advanced AI research agents readily available to researchers worldwide.

Four Agents: The first batch includes four specialized AI agents: Crow (general Q&A), Falcon (in-depth literature review), Owl (research novelty check), and Phoenix (chemical experiment planning, still experimental).

Superhuman Performance: In rigorous benchmark testing, Crow, Falcon, and Owl outperformed PhD-level researchers and top traditional search tools on precision and accuracy in key literature retrieval and information synthesis tasks.

Science-Tailored: These agents are built from the ground up for scientific research, with access to a vast collection of high-quality open-access papers, specialized databases, and tools, and can evaluate sources and methods the way experienced researchers do.

Value Insights

For Practitioners: The platform gives researchers powerful tools that can significantly accelerate literature analysis, hypothesis verification, and experimental design, shortening research cycles and improving efficiency. Through the API, teams can build customized automated research workflows, such as real-time literature monitoring and large-scale data correlation analysis, which may foster new research paradigms. Transparent reasoning helps users trace the sources of the AI’s conclusions, builds trust, and may even inspire new ideas as they examine its reasoning paths. FutureHouse’s non-profit status may lead to a greater focus on basic science and universally beneficial tools that align with the public interest of the scientific community. Its long-term goal is to build autonomous “AI scientists.”

For the General Public: In the long term, by accelerating research in key areas such as disease treatment and climate change, the platform is expected to indirectly contribute to breakthroughs that improve quality of life. Greater research efficiency and accessibility may also reduce research costs, allowing the benefits of scientific progress to reach society faster and more widely.

Recommended Reading: FutureHouse Official Website

02 🗣️ Tavus Launches CVI: Enabling AI with Real-time Video Interaction Capabilities to “Read Faces and Understand Emotions”

Tavus, a company specializing in AI video technology, recently upgraded its core product, the Conversational Video Interface (CVI). The new version of CVI aims to create AI video agents with “emotional intelligence,” enabling real-time, natural, and highly human-like interactions. It is built on a series of new AI models developed by Tavus: Phoenix-3 handles hyper-realistic facial rendering and expression generation, Raven-0 performs real-time visual perception (interpreting user emotions and non-verbal cues), and Sparrow-0 manages natural dialogue flow. Working together, these models allow the AI not only to “understand” speech but also to “see” and interpret users’ facial expressions, eye contact, gestures, and emotional tone during real-time video calls, so that it can respond more appropriately and empathetically. Tavus claims that CVI can achieve end-to-end response latency of less than 600 milliseconds and provides an API for easy integration.

Key Highlights

Core Technology: CVI is positioned as an end-to-end solution for creating real-time, multimodal (audiovisual) AI video conversation experiences, with the goal of making AI communicate like real people.

Emotional Intelligence: The new generation of CVI emphasizes AI’s emotional understanding and response. Through models like Raven-0, AI can analyze user emotions, engagement, and other visual cues in real time and adjust its own expressions.

Core Models: CVI relies on three key models: Phoenix-3 (realistic digital human appearance and expressions), Raven-0 (visual perception of non-verbal information), and Sparrow-0 (dialogue flow control and natural turn-taking).

Ultra-Low Latency: Tavus promises industry-leading low latency, with a typical round-trip time from the end of user speech to the AI’s response of about 600 milliseconds, ensuring smooth and natural conversations.

Realistic Presentation: Phoenix-3 strives for “hyper-realistic” rendering, so that the AI avatar’s facial expressions reflect complex emotions and dialogue states rather than simple lip synchronization.

Value Insights

For Practitioners: CVI gives AI application developers powerful tools to create next-generation interactive applications that go far beyond traditional text and voice chatbots, with particular promise for improving user engagement in customer service, education, and marketing. It presents new challenges and opportunities for UX/UI designers: how to design interaction flows and interfaces around realistic AI agents that can perceive and express emotions while ensuring user comfort and trust. Companies can use CVI to deploy highly human-like AI agents that handle tasks at scale, potentially reducing costs and increasing efficiency, but they need to evaluate cost-effectiveness, technological maturity, and user acceptance. The technology reflects a broader trend of human-computer interaction evolving towards multimodal and embodied interaction, which aims to simulate the full information bandwidth of human communication and improve communication in complex scenarios. The ethical issues deserve attention: highly realistic “emotional intelligence” may blur the line between humans and machines, raising concerns about trust, manipulation, and emotional dependence. In sensitive scenarios such as mental health, the boundaries and impact of simulated empathy need careful evaluation.

For the General Public: People may soon interact with more human-like AI, such as shopping guides and virtual tutors, making the experience more natural and immersive. They will also need to get better at distinguishing real humans from highly realistic AI, especially in scenarios involving important information, decision-making, or interpersonal relationships, in order to guard against misleading information and fraud.

Recommended Reading: Tavus Official Website

03 🩺 Google AMIE “Sees Clearly”: Does Multimodal AI Diagnostic Capability Surpass Human Doctors?

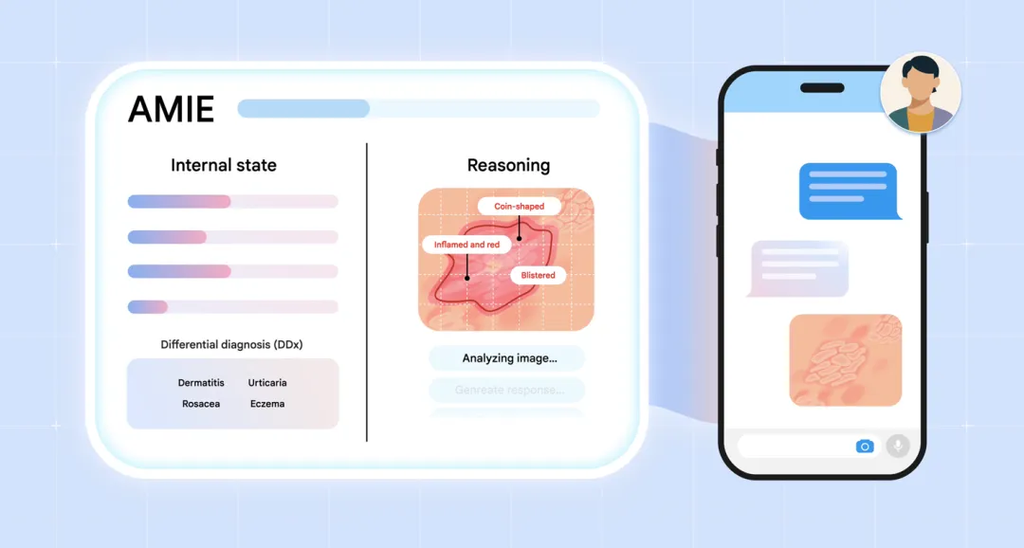

Google recently announced significant progress on AMIE, its medical AI research system: AMIE can now understand and integrate visual medical information such as skin photos, X-rays, and electrocardiograms, which means it can interpret image data during diagnostic conversations. The capability builds on Google’s Gemini model and a new “state-aware reasoning framework” that mimics a clinician’s thought process. During text chats with simulated patients, the framework decides when to request visual information, interprets it, and incorporates it into diagnostic reasoning. In a simulated study modeled on the Objective Structured Clinical Examination (OSCE) and covering 105 clinical scenarios, actors playing patients interacted with either multimodal AMIE or human primary care physicians (PCPs). The results showed that AMIE matched or surpassed the participating PCPs on several key indicators, including diagnostic accuracy (especially in interpreting multimodal data), appropriateness of management plans, clinical judgment, and communication skills (including empathy).

Key Highlights

Multimodal Integration: AMIE has been upgraded from a pure text system to one that can directly process and understand visual information (skin images, X-rays, ECGs, etc.) in clinical conversations.

Core Technology: Powered by Google’s advanced Gemini multimodal large model (the study mainly used Gemini 2.0 Flash) and a specially designed “state-aware reasoning framework.”

Reasoning Framework: Gives AMIE the ability to dynamically adjust its dialogue strategy, track understanding of the condition, diagnostic hypotheses, and uncertainties, intelligently switch stages, and request information (including images) at appropriate times.

Simulated Evaluation: A rigorous OSCE format was adopted, building a simulated environment with a real medical image database and allowing actors playing patients to have remote text consultations with AMIE or PCPs.

Performance Superiority: In the simulated environment, AMIE performed excellently across multiple clinical dimensions. It stood out most in diagnoses that required integrating visual information, where its accuracy and completeness significantly outperformed those of the PCPs, and its management plan and clinical judgment scores were also higher.

Empathy Performance: Remarkably, the actors playing patients generally rated AMIE’s empathy and clarity of communication in text chats even higher than those of the human doctors.

Value Insights

For Practitioners: The study demonstrates the potential of AI as a powerful clinical assistant that can help doctors process information efficiently, suggest diagnostic ideas, and assist in interpreting data, which is especially valuable in resource-limited areas and telemedicine. AMIE’s multimodal integration strategy and state-aware reasoning framework offer a technical template for building more intelligent clinical decision support systems, and its simulated OSCE evaluation method is also worth learning from. Medical institutions need to follow these trends and consider how to integrate such systems into clinical workflows safely and effectively, which involves complex questions of technology, ethics, law, training, and patient acceptance. AMIE’s unexpectedly high “empathy” score challenges stereotypes about AI, but it also raises questions about the nature of that “empathy” (pattern learning versus genuine emotion) and calls for more careful evaluation of AI’s soft skills. The project’s progress may further polarize attitudes towards AI in the medical community (huge potential on one side; anxiety about replacement, privacy concerns, and doubts about reliability on the other), making reasonable regulatory frameworks and ethical guidelines more urgent.

For the General Public: In the future, people may be able to obtain convenient and accurate AI-assisted preliminary health consultations through telemedicine, especially in non-emergency situations or for second opinions. It is important to understand that, for the foreseeable future, AI will assist doctors rather than replace them, and final decisions will remain the responsibility of human doctors. The protection of personal health data in AI model training and deployment will be a core privacy issue that the public needs to keep watching.

Recommended Reading: Google Research Blog (AMIE Visual Capabilities)

04 🦉 Duolingo Fully Embraces AI: Efficiency Gains and Workforce Adjustments Go Hand in Hand

Duolingo, a well-known language learning platform, announced a shift to an “AI-first” strategy in an internal memo from CEO Luis von Ahn that was later shared publicly. The core goal is to use AI to greatly increase the efficiency of language teaching content creation and the overall scale of operations. To this end, the company will make structural changes, most notably “gradually ceasing to use (human) contractors to complete tasks that AI can handle” (such as content generation and translation) and factoring AI proficiency into hiring and performance reviews. Teams that request additional headcount must first show that the work cannot be automated. Von Ahn emphasized that the move is meant to empower full-time employees, freeing them to focus on more creative work, and that the company will provide training support. As an initial result, Duolingo announced that it has created 148 new language courses with the help of AI within a year.

Key Highlights

Strategic Core: Duolingo has established “AI-first” as a company-wide strategy, with AI deeply integrated into and reshaping core operations and workflows.

Human Resources Policy: The ability to apply AI will become an important criterion in hiring new employees and evaluating the performance of existing ones; headcount increases require prior proof that the work cannot be automated.

Efficiency Driven: The primary motivation is to significantly increase the speed and scale of content production, meeting the demands of rapid growth and overcoming the bottleneck of time-consuming manual methods.

Employee Positioning: Management states that AI is intended to empower rather than replace full-time employees, streamlining processes so that they can focus on high-value creative work, with training support provided.

Value Insights

For Practitioners: This is a warning for content creators, translators, and contractors: AI poses a direct replacement risk to certain knowledge-based and creative positions, especially those built on repeatable patterns, so workers in them need to move towards skills that AI finds hard to replicate or learn to collaborate with AI. It is also a case study for business managers and HR in how to integrate AI systematically and adjust organizational structure, HR policies, and performance systems. Anyone following this example needs to weigh efficiency gains against employee morale, skills transformation, and content quality control. For practitioners in the EdTech field, the case highlights the potential of AI in content production at scale, but it also sounds a warning about the need to safeguard the accuracy and effectiveness of AI-generated content and avoid sacrificing quality. The policy reflects a management philosophy and cost-control logic of prioritizing automation over hiring and cutting contract workers first, which suggests a strong motivation to use AI to optimize labor costs, especially variable costs. The challenge is to preserve brand characteristics (such as a fun, human style) and a high-quality user experience amid the wave of automation, and to avoid homogeneous, dry, or erroneous content that would damage user loyalty and reputation.

For the General Public: Learners may gain access to more diverse resources covering a wider range of less common languages, increasing learning opportunities. They should stay discerning about the quality of AI-generated educational content, paying attention to accuracy, practicality, and engagement, and seek supplementary resources when necessary. The case is also a microcosm of AI reshaping the structure of the employment market for knowledge-based and creative positions, and its long-term socioeconomic impact deserves attention.

Recommended Reading: Duolingo CEO’s Open Letter

Today’s Summary

Today’s developments in artificial intelligence show both the enormous potential of technological breakthroughs and the real-world challenges and ethical questions that accompany their integration into society. At the forefront of research, FutureHouse has launched a “superhuman” AI agent platform designed specifically for scientific research, whose ability to process massive amounts of literature and assist in experiments foreshadows a shift in the paradigm of scientific discovery. In healthcare, Google’s AMIE system has integrated visual information and surpassed human doctors in simulated diagnostic conversations, particularly in diagnostic accuracy and empathetic expression. The result offers a glimpse of the future of AI-assisted diagnosis and treatment, while also sparking discussion about the nature and reliability of its “empathy.”

However, the social integration of AI is not without its challenges. The controversy surrounding the AI avatar appearing in a New York court has brought the issues of transparency, accountability, and ethical boundaries in legal tech applications to public attention, highlighting the tension between existing legal frameworks and new technologies. In commercial applications, Duolingo’s aggressive “AI-first” strategy, which uses AI to improve efficiency and reduce contract worker positions, reflects companies’ desire to pursue scale and cost optimization through AI. It also illustrates the impact of AI on the job market and the difficulty of balancing efficiency with quality, and automation with a human touch. Finally, Tavus’s release of its emotionally intelligent Conversational Video Interface (CVI), which gives AI the real-time ability to “read faces and understand emotions,” shows human-computer interaction evolving in a more natural and emotionally rich direction, but it also raises new questions about simulation, trust, and ethics.

Overall, AI is penetrating fields such as scientific research, healthcare, law, education, and human-computer interaction at an unprecedented speed and depth. While it demonstrates significant value in improving efficiency and expanding the boundaries of capabilities, it also continues to pose major issues to society that urgently need to be addressed, including ethical norms, legal regulation, employment structure adjustments, and the reshaping of human-machine relationships.