AI News Daily

AI News Letter

📌 Table of Contents:

- 🤖 Llama 4 Launch Controversy: Performance Issues & “Benchmark Cheating” Allegations?

- 🚀 OpenAI CEO Altman: Programmer Productivity Could Increase 10x Within a Year.

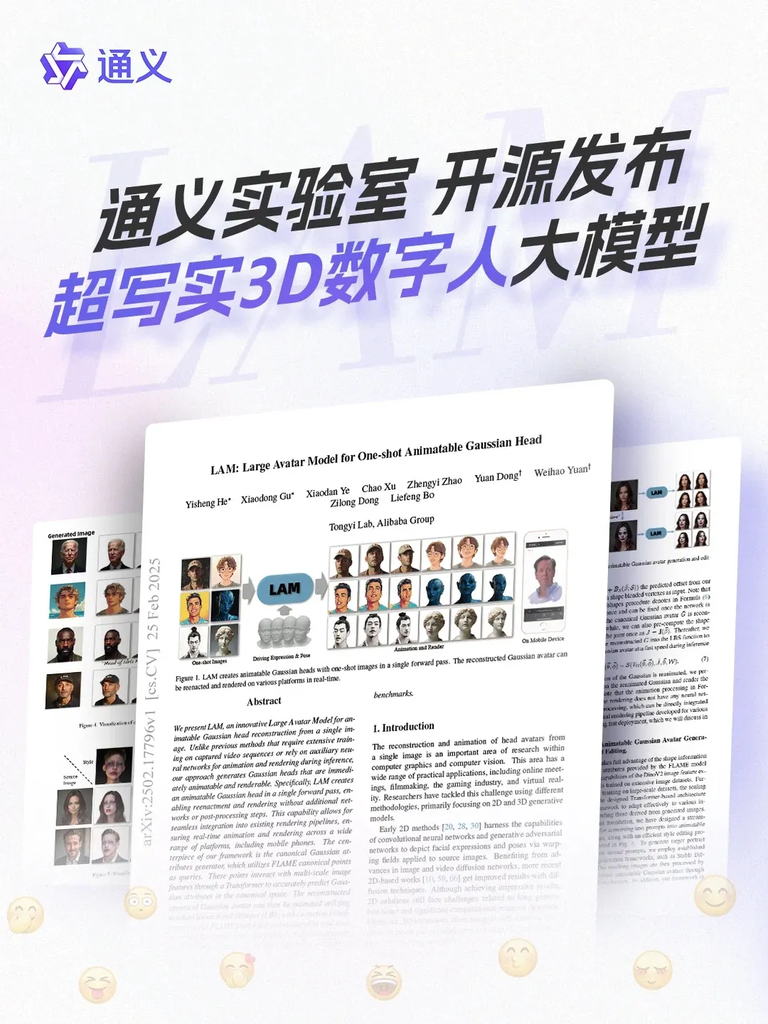

- 👤 Tongyi Open-Sources “Single Image to Person” Model: Generates Hyper-realistic 3D Digital Humans in Seconds.

- ⏳ GPT-5 Release Delayed! OpenAI CEO: o3/o4-mini Models Coming First.

- ⚖️ OpenAI Updates: Deep Research Feature Opening to Free Users Amidst Legal Challenges.

- ⚡ Anthropic Chief Scientist: Claude 4 Likely Within Six Months; AI Iteration Outpacing Hardware Cycles!

- 🎮 Mihoyo Founder Cai Haoyu’s New AI Game “Whispers From The Star” Gameplay Revealed.

PART 01: Llama 4 Launch Mired in Controversy: Performance Issues & “Benchmark Cheating” Allegations?

Highlights:

Meta’s highly anticipated Llama 4, billed as a natively multimodal Mixture-of-Experts (MoE) model family meant to lead the open-source field against competitors such as DeepSeek V3, met significant backlash on release. Community testing reported widespread performance problems, particularly in coding and long-context tasks, well short of both the advertised capabilities and competing models. More seriously, an anonymous post alleged that Meta may have mixed benchmark datasets into training to meet deadlines, which would amount to “benchmark cheating.” Meta AI executives and researchers involved in the project (including Licheng Yu) denied the accusations, saying that optimizing deployed implementations takes time. Even so, the incident has caused a major stir and shaken Llama’s reputation as an open-source leader.

- Underwhelming Performance: Community users reported that Llama 4 (including the 402B Maverick and 109B Scout variants) performed poorly on coding tasks, at a level comparable only to Qwen-32B or Grok-2/Ernie 4.5. Its score on the aider coding benchmark was just 16%, near the bottom of the rankings. Long-context processing was also criticized as “all show and no go,” with recall rates below 60% even at 1K context, significantly worse than the Gemini series.

- Cheating Allegations: A post on the “1Point3Acres” forum claimed Llama 4 didn’t achieve state-of-the-art (SOTA) results. To meet the release deadline, leadership allegedly suggested mixing benchmark test sets into the post-training phase to inflate scores. The whistleblower claimed to have resigned over this and requested removal from the technical report, also linking Meta AI research head Joelle Pineau’s departure to the issue.

- Official Denial: Meta AI VP Ahmad Al-Dahle and Research Scientist Manager Licheng Yu strongly denied the “cheating” claims, stating performance discrepancies were due to the time needed for stabilizing application implementations. They asserted no training occurred on test sets and welcomed specific evidence of cheating.

- Open Source Setback? Llama 4 has steep hardware requirements (H100-class GPUs), so even quantized versions are hard to run on consumer GPUs. In addition, the new license requires companies with more than 700 million monthly active users to obtain special permission, which raises questions about Meta’s commitment to open source.

- Trust Crisis: The incident sparked extensive negative discussion on platforms like Reddit. Llama enthusiasts expressed disappointment, with some joking about a rename to “LocalGemma.” The episode has seriously damaged Meta’s reputation within the open-source community.
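The contamination concern in the allegations above can be made concrete with a simple overlap check. The sketch below is not Meta’s process or any specific auditor’s tool; it assumes a common heuristic in which a benchmark item is flagged if it shares at least one word-level n-gram with any training document. The function names and the default `n = 8` are illustrative assumptions.

```python
from typing import Iterable, Set, Tuple


def ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """Return the set of word-level n-grams in `text` (lowercased, whitespace-split)."""
    tokens = text.lower().split()
    # If the text has fewer than n tokens, the range is empty and so is the set.
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def contamination_rate(benchmark_items: Iterable[str],
                       training_docs: Iterable[str],
                       n: int = 8) -> float:
    """Fraction of benchmark items that share at least one n-gram with the training docs."""
    train_grams: Set[Tuple[str, ...]] = set()
    for doc in training_docs:
        train_grams |= ngrams(doc, n)

    items = list(benchmark_items)
    if not items:
        return 0.0
    flagged = sum(1 for item in items if ngrams(item, n) & train_grams)
    return flagged / len(items)
```

A high rate on a held-out test set would be a reason to distrust the reported score. A low rate does not prove the data is clean, since paraphrased or reformatted items escape exact n-gram matching.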

Takeaways for Practitioners:

For AI professionals, the Llama 4 incident serves as a stark reminder: model evaluation shouldn’t solely rely on leaderboards. Community testing and real-world application feedback are the true tests of a model’s capabilities. Transparency and integrity in AI development are paramount; any perceived “shortcuts” can severely damage reputation.

For general users, it highlights the need for caution when evaluating AI tools, distinguishing marketing claims from actual performance. The event also reflects the intense competition and potential “traffic anxiety” in the AI field, which can lead to questionable decisions. Ultimately, solid technology and reliable performance, not brute force or potential manipulation, will win user trust and market share. The health of the open-source community depends on trust, openness, and genuine capability.

Related Link 🔗:

https://www.reddit.com/r/LocalLLaMA

PART 02: Programmer Productivity Could Increase 10x Within a Year, AI Agents Show Promise!

Highlights:

In recent interviews, OpenAI CEO Sam Altman shared his views on AI trends. He emphasized focusing on achieving a “10x increase in programmer productivity” within the next year or two, rather than aiming for fully automated programming. He sees AI as a crucial assistive tool in programming history, enabling developers to create more value efficiently. As software development efficiency increases, the market price of code might decrease, but demand will rise. Altman also expressed strong enthusiasm for AI Agents, envisioning a future where users give simple commands (like “add a new feature”), and the Agent handles the implementation. Addressing criticisms of AI applications being mere “wrappers,” Altman encouraged innovators to persist, believing that seemingly simple applications today could revolutionize the world tomorrow.

- Efficiency Surge: Predicts AI will boost programmer productivity by 10x within a year.

- Tool Empowerment: Stresses AI’s core value lies in assisting humans and enhancing creativity, not complete replacement.

- Code Economics: Analyzes how AI will lower code costs while stimulating greater software demand.

- Agent Vision: Describes a future where AI Agents automate development tasks based on user requests.

- Embrace Innovation: Encourages developers not to fear the “wrapper” label and to pursue early explorations that could lead to breakthroughs.
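The “Code Economics” point, that cheaper code can coexist with higher overall demand, can be illustrated with a small calculation. Altman did not present a model; the sketch below assumes a textbook constant-elasticity demand curve, Q = scale × P^(−elasticity), and the function name and parameters are hypothetical.

```python
def total_spend(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Total spend P * Q under an assumed constant-elasticity demand Q = scale * P ** (-elasticity)."""
    quantity = scale * price ** (-elasticity)
    return price * quantity


# Halving the price of software when demand is elastic (elasticity > 1)
# raises total spend: 0.5 * 0.5 ** -1.5 is about 1.41, up from 1.0.
elastic = total_spend(0.5, elasticity=1.5)

# With inelastic demand (elasticity < 1) the same price cut shrinks
# total spend: 0.5 * 0.5 ** -0.5 is about 0.71.
inelastic = total_spend(0.5, elasticity=0.5)
```

Under this assumption, the claim that demand grows enough to offset falling prices amounts to a bet that demand for software is elastic.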

Takeaways for Practitioners:

For developers, Altman’s perspective signals a profound shift in work patterns, requiring proactive learning and utilization of AI tools to enhance competitiveness and focus on higher-level architectural design and innovation.

For users and businesses, the development of AI Agents promises to significantly lower the barriers to using and developing software, enabling easier customization of personalized features. Altman’s remarks also remind us to maintain an open mind towards emerging technologies, as early, seemingly simple innovations often hold immense disruptive potential.

Related Link 🔗:

https://www.youtube.com/watch?v=xFvlUVkMPJY

PART 03: Tongyi Open-Sources “Single Image to Person” Model: Generates Hyper-realistic 3D Digital Humans in Seconds

Highlights:

Alibaba’s Tongyi large model team announced on April 7th that it is open-sourcing its latest “Large Avatar Model” (LAM) for creating hyper-realistic 3D digital humans. From a single 2D image, LAM generates a high-quality, drivable, and expressive 3D head model within seconds. It does so with a Transformer architecture in which 2D image features interact with a 3D Gaussian splatting representation, together with a generalizable 3D Gaussian prior trained on massive video datasets. Tongyi also built a WebGL-based driving and rendering framework, so the generated models run in real time (up to 120 FPS on mobile) on virtually any device, including smartphones. The team additionally provides a complete real-time conversation SDK that integrates a large language model (LLM), automatic speech recognition (ASR), and text-to-speech (TTS), so users can hold low-latency conversations with their “image avatars.”

- Single Image, Fast Generation: Input a photo, get an animatable, hyper-realistic 3D head in seconds.

- Innovative Architecture: Uses a 2D-3D interactive Transformer to learn a generalizable 3D Gaussian prior.

- Cross-Platform Efficiency: WebGL-based rendering ensures excellent performance, reaching 120FPS even on mobile.

- Real-Time Interaction: Offers a full SDK with LLM, speech recognition, and synthesis for low-latency dialogue.

- Fully Open Source: Technical report, GitHub code repository, and Hugging Face online demo are all publicly available.
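The conversation flow described above (speech in, ASR, LLM, TTS, speech out) can be sketched as a single turn with per-stage timing. This is not the LAM SDK’s API: `run_turn`, `TurnResult`, `frame_budget_ms`, and the three `fake_*` stages are hypothetical placeholders standing in for real models.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class TurnResult:
    reply_text: str
    reply_audio: bytes
    latency_ms: Dict[str, float]


def run_turn(audio_in: bytes,
             asr: Callable[[bytes], str],
             llm: Callable[[str], str],
             tts: Callable[[str], bytes]) -> TurnResult:
    """Run one ASR -> LLM -> TTS turn and record each stage's latency in milliseconds."""
    latency: Dict[str, float] = {}

    start = time.perf_counter()
    user_text = asr(audio_in)
    latency["asr"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply_text = llm(user_text)
    latency["llm"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply_audio = tts(reply_text)
    latency["tts"] = (time.perf_counter() - start) * 1000

    return TurnResult(reply_text, reply_audio, latency)


def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame at the given frame rate."""
    return 1000.0 / fps


# Hypothetical stand-ins for real models: they only pass text through.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(prompt: str) -> str:
    return f"You said: {prompt}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")
```

Calling `run_turn(b"hello", fake_asr, fake_llm, fake_tts)` returns the reply plus a latency breakdown, which shows where a low-latency budget is being spent. For the rendering side, `frame_budget_ms(120)` gives roughly 8.3 ms per frame, the budget implied by the 120 FPS figure.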

Takeaways for Practitioners:

The open-sourcing of Tongyi’s LAM significantly lowers the technical barriers and costs associated with creating and deploying high-quality 3D digital humans.

For developers, content creators, and businesses, this provides a more accessible and efficient tool for applications in virtual customer service, online education, interactive entertainment, digital human live streaming, virtual companionship, and more, potentially spurring numerous innovative applications.

For general users, this paves the way for interacting with more realistic and naturally engaging virtual digital humans in the future, enriching digital life experiences.

Related Link 🔗: https://github.com/aigc3d/LAM

PART 04: GPT-5 Release Delayed! OpenAI CEO: o3/o4-mini Models Coming First

Highlights:

OpenAI’s highly anticipated next-generation flagship model, GPT-5, will not arrive as soon as initially expected. CEO Sam Altman confirmed that its launch has been pushed back by several months, for three main reasons. First, integrating numerous new features into a unified system proved more complex than anticipated. Second, development showed that the model could be made significantly better than initially projected, which calls for more time on optimization and refinement. Third, infrastructure must be scaled up to handle the unprecedented demand OpenAI anticipates for the new model. As an interim step and technical validation, OpenAI plans to release the o3 and o4-mini models, originally intended as internal components of GPT-5, in the coming weeks. Reportedly, o3 has already demonstrated coding ability on par with top-tier programmers in internal tests. These transitional models are expected to offer scalable architecture, multimodal capabilities, and improved cost-effectiveness.

- Release Postponed: GPT-5’s official debut is delayed by several months.

- Integration Challenges: Merging advanced features into a single model is more complex than expected.

- Performance Breakthrough: The model shows immense potential, exceeding performance expectations, necessitating more refinement time.

- Infrastructure First: Proactive infrastructure scaling is required to meet future demand.

- Intermediate Models: o3 and o4-mini models, featuring advanced capabilities, will be released soon as a bridge.

Takeaways for Practitioners:

GPT-5’s delay indicates that even industry leaders like OpenAI face significant engineering and technical hurdles when pushing the frontiers of AI. It reminds the industry that groundbreaking AI progress doesn’t happen overnight. While developers and users eager for GPT-5 need more patience, the upcoming o-series models might offer early glimpses of enhanced capabilities. This delay also provides a window of opportunity for competitors to catch up.

Related Link:

https://x.com/sama/status/1908167621624856998 (Note: This link points to a specific tweet by Sam Altman)

PART 05: OpenAI Updates: Deep Research Feature Opening to Free Users Amidst Legal Challenges

Highlights:

OpenAI has seen several recent developments. On the positive side, its powerful “Deep Research” feature, currently exclusive to paid subscribers, is being tested for a wider rollout and is expected to become available to all free ChatGPT users soon. On the legal front, however, a federal judge in the copyright infringement lawsuit filed by The New York Times denied OpenAI’s motion to dismiss parts of the suit. The judge found that the evidence presented by the Times was sufficient to support claims that OpenAI likely knew its models could reproduce copyrighted content. Concurrently, new research suggests that OpenAI models, including GPT-4 and GPT-3.5, may have “memorized” substantial amounts of copyrighted material (such as books and news articles) during training. Although OpenAI maintains that its training practices fall under “fair use,” the court’s preliminary ruling and the research findings increase its legal risk. Additionally, reports surfaced that OpenAI had discussed acquiring an AI device startup that Jony Ive and Sam Altman have both invested in.

- Feature Accessibility: Deep Research function soon available to free ChatGPT users.

- Litigation Setback: Court refuses to dismiss key claims in The New York Times lawsuit; copyright battle continues.

- Copyright Risk: Both judicial and research findings indicate potential for OpenAI models to “memorize” and reproduce copyrighted content.

- Fair Use Debate: OpenAI’s core defense of “fair use” faces significant challenges.

- Acquisition Rumors: Reports suggest OpenAI considered acquiring an AI hardware startup.
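One simple way to probe the kind of “memorization” discussed above is to measure how long a verbatim run a model’s output shares with a reference text. The sketch below is not the method used in the cited research or in the lawsuit; it is a minimal illustration using Python’s standard `difflib`, and the function name is hypothetical.

```python
from difflib import SequenceMatcher


def longest_verbatim_run(model_output: str, reference: str) -> int:
    """Length, in words, of the longest contiguous word sequence found in both texts."""
    out_tokens = model_output.split()
    ref_tokens = reference.split()
    # autojunk=False disables difflib's heuristic that ignores very frequent tokens.
    matcher = SequenceMatcher(None, out_tokens, ref_tokens, autojunk=False)
    match = matcher.find_longest_match(0, len(out_tokens), 0, len(ref_tokens))
    return match.size
```

Short shared runs are expected for any fluent text. Long runs, such as dozens of consecutive words matching a specific article, are harder to explain without the source having been seen during training.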

Takeaways for Practitioners:

Making the Deep Research feature available to free users is a significant benefit, enhancing ChatGPT’s information retrieval and analysis capabilities for a broader audience. However, the ongoing lawsuit with The New York Times and research on model memorization highlight the acute and complex nature of copyright issues surrounding AI training data. This serves as a warning not just for OpenAI but for the entire AI industry, potentially forcing a re-evaluation of data sourcing, training methodologies, and content generation mechanisms to balance innovation with copyright protection. Future legal rulings in this area will profoundly impact the trajectory of AI development.

PART 06: Anthropic Chief Scientist: Claude 4 Likely Within Six Months; AI Iteration Outpacing Hardware Cycles!

Highlights:

News from Anthropic, a major competitor to OpenAI: Chief Scientist Jared Kaplan recently revealed that the company’s highly anticipated next-generation large model, Claude 4, is expected to launch “in the next six months or so.” Kaplan also made a noteworthy observation: the development cycle in the AI field is rapidly shrinking, with model iteration speed now potentially exceeding the update cycle of the underlying hardware (like GPUs). He believes that algorithmic and engineering optimizations, such as post-training and reinforcement learning (RL), are significant drivers of this accelerated progress in AI, and there are currently no signs of this high-speed development slowing down.

- New Model Teaser: Anthropic’s next-gen model, Claude 4, expected within ~6 months.

- Compressed Cycles: AI software (model) iteration is outpacing hardware updates.

- Technology Driven: Post-training, RL, and other techniques are key engines accelerating AI development.

- Sustained High Speed: Technological progress in AI shows no signs of deceleration currently.

Takeaways for Practitioners:

Kaplan’s comments highlight a crucial trend in AI: software and algorithmic innovation are becoming the core drivers pushing the boundaries of AI capabilities, potentially outpacing hardware advancements governed by Moore’s Law. For practitioners, this means a constant need to stay updated on the latest algorithms, model architectures, and training methods. For the industry, it suggests that AI capabilities will improve even more rapidly, potentially leading to swift changes in the competitive landscape. Users can look forward to experiencing more powerful multimodal models from Anthropic in the near future.

Related Link:

https://x.com/vitrupo/status/1908763535351669017 (Note: Link points to a specific tweet discussing Kaplan’s comments)

PART 07: Mihoyo Founder Cai Haoyu’s New AI Game “Whispers From The Star” Gameplay Revealed

Highlights:

Anuttacon, the new company founded by Mihoyo’s Cai Haoyu, has unveiled gameplay footage of its first experimental AI game, “Whispers From The Star,” running on an iPhone. In the game, players interact with the protagonist, Stella (nicknamed “Xiao Mei”), who has crash-landed on an alien planet, and communicate with her via text, voice, and video to help her survive and escape. The game’s defining feature is its AI-generated narrative, which follows no pre-set script. Player conversations and decisions directly affect Stella’s fate and can even foster subtle emotional connections (in one demo, Stella used “cheesy pick-up lines” that made the tester blush). The game is currently recruiting closed beta testers who use an iPhone 12 or newer.

- AI-Driven Narrative: Core gameplay involves interacting with AI NPC Stella; plot dynamically generated, no fixed script.

- Immersive Interaction: Supports multiple communication methods (text, voice, video), includes emotional expression and real-time status displays (like heart rate, environmental temperature).

- Consequential Choices: Player decisions and dialogue directly determine the NPC’s survival and story progression, emphasizing player agency.

- Company Vision: Anuttacon aims to create personalized, immersive interactive entertainment, exploring new forms where games evolve with players. Its website now emphasizes “personalization” and “connection” over earlier “AGI” goals.

- Closed Beta Underway: Gameplay demo released; closed beta testing initiated for iPhone 12+ users. The team has grown to nearly 50 people, including industry veterans.

Takeaways for Practitioners:

For game developers, “Whispers From The Star” demonstrates the immense potential of AI in creating dynamic narratives, deep NPC interactions, and personalized emotional experiences. It could pave the way for new genres like “virtual companions” or emotionally driven games.

For players, this suggests future games might offer more realistic, immersive, and open-ended experiences, fostering deeper, more personalized relationships with virtual characters. This is a significant exploration of AI empowering the interactive entertainment industry, moving towards Anuttacon’s vision of “Gaming Is Life,” and signals that AI gaming could become the next major competitive frontier.

AI Tech News Daily Summary

The AI field is currently in a critical period of rapid iteration, filled with both opportunities and risks. From the accurate assessment of model performance and the ethical boundaries of innovative applications to the copyright compliance of training data and the long-term impact of artificial general intelligence, every aspect is fraught with variables and challenges, continuously shaping the future direction of technological development.

ZC Digitals

🚀 Leading enterprise digital transformation, shaping the future of industry together. We specialize in creating customized digital systems integrated with AI, enabling intelligent upgrades and deep integration of business processes. Leveraging our core team’s top-tier tech background from institutions like MIT and Microsoft, we help you build powerful AI-driven infrastructure to enhance efficiency, drive innovation, and achieve industry leadership.