Table of Contents

- 🤝 Google Joins Forces with Giants to Launch A2A Protocol, Ushering in a New Era of AI Agent Collaboration

- 💻 Google’s Project IDX Merges into Firebase Studio, Creating an Agent-Driven Development Platform

- 🤖 Samsung Partners with Google Gemini, AI Home Robot Ballie to Debut This Summer

- 🧠 OpenAI Fully Upgrades ChatGPT Memory, Altman Hints GPT-4.1/o3/o4-mini Are Coming Soon

- 💪 Google Releases Its Strongest AI Inference Chip Ironwood, Several AI Models Receive Major Updates

- 🔒 Breakthrough in Mobile AI Model Security: THEMIS Framework Achieves Efficient Watermarking Protection

🤝 Google Joins Forces with Giants to Launch A2A Protocol, Ushering in a New Era of AI Agent Collaboration

At its Cloud Next 2025 conference, Google officially launched the Agent2Agent (A2A) open interoperability protocol, aiming to break down barriers between different AI agents and enable secure, efficient cross-platform collaboration among agents built by different developers using various frameworks. The protocol has garnered support from over 50 industry giants, including Salesforce, SAP, and Atlassian, signaling the rapid formation of an interconnected, collaborative enterprise AI agent ecosystem.

Core Highlights

- Cross-Boundary Communication: A2A builds on existing standards (HTTP, SSE, JSON-RPC), providing a common framework for agents from different vendors and architectures to securely exchange information, discover each other's capabilities, and coordinate actions, even without shared memory or tools. It natively supports multiple modalities, including text, audio, and video.

- Protocol Complementarity: A2A complements, rather than replaces, Anthropic’s Model Context Protocol (MCP). Think of MCP as the “wrench” empowering a single agent, while A2A is the mechanism for multiple agents (a “mechanic team”) to collaborate on problem-solving, together building a more robust autonomous agent ecosystem.

- Ecosystem Co-Construction: Google collaborated with numerous tech partners and service providers on A2A’s design and implementation. Released under the Apache 2.0 license, its open, collaborative approach aims to establish it as an industry standard and foster a thriving ecosystem.

- Empowering Complexity: A2A excels in enterprise processes requiring multi-agent collaboration, like automated recruitment. Built-in features like capability discovery (Agent Card), task flow management, and UX negotiation significantly boost automation and efficiency for complex tasks.
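The discovery-then-delegate flow described above can be sketched in a few lines. This is a minimal illustration, not the A2A specification: the card fields (`name`, `url`, `skills`), the example URLs, the `tasks/send` method name, and the `params` layout are all assumptions made for the example. Only the JSON-RPC 2.0 envelope (`jsonrpc`, `id`, `method`, `params`) follows a published standard. No network call is made.

```python
import json
import uuid

# Hypothetical Agent Cards: each agent advertises what it can do.
# Field names and URLs are illustrative assumptions, not the A2A schema.
AGENT_CARDS = [
    {
        "name": "sourcing-agent",
        "url": "https://agents.example.com/sourcing",
        "skills": ["find_candidates"],
    },
    {
        "name": "scheduling-agent",
        "url": "https://agents.example.com/scheduling",
        "skills": ["schedule_interview"],
    },
]


def find_agent(cards, skill):
    """Capability discovery: return the first card that lists the skill."""
    for card in cards:
        if skill in card["skills"]:
            return card
    return None


def build_task_request(skill, text):
    """Wrap a task in a JSON-RPC 2.0 request envelope.

    The method name and params layout are assumptions for illustration.
    """
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),
        "method": "tasks/send",
        "params": {"skill": skill, "message": {"role": "user", "text": text}},
    }


# A recruiting agent delegates interview scheduling to a specialist.
card = find_agent(AGENT_CARDS, "schedule_interview")
request = build_task_request("schedule_interview", "Book a 30-minute interview")
payload = json.dumps(request)  # this string would be POSTed to card["url"]
print(card["name"], "<-", payload)
```

In a real deployment the client would fetch the card from the remote agent and send the payload over HTTP; both steps are omitted here to keep the sketch self-contained.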

Value Proposition

- For Professionals: Lowers the barrier to building complex AI applications; developers can easily integrate specialized agents from different vendors, reducing costs. Enterprises can connect siloed systems for deeper automation and efficiency. The open protocol will also spur new agent services and business models.

- For Ordinary People: Users may experience more seamless, intelligent services. When booking a complex trip, for example, a user could talk to one primary agent while specialized agents collaborate in the background via A2A, simplifying the process. Personal and work assistants could become more powerful, drawing on multiple agents for comprehensive, personalized services.

Related Links

- Google Developers Blog A2A Protocol Announcement

- Google Cloud Official Blog Announcement

- Techmeme Discussion

- In-depth Research Report on A2A Protocol (Example)

💻 Google’s Project IDX Merges into Firebase Studio, Creating an Agent-Driven Development Platform

Google announced that its cloud-based IDE, Project IDX, has officially merged into Firebase Studio. The move aims to create a platform deeply integrated with AI agents that covers the entire application development lifecycle. It is seen as Google’s strategic play to compete with rivals like Replit and Cursor in AI-assisted development and to strengthen the AI capabilities at the core of its Firebase ecosystem.

Core Highlights

- Strategic Integration: Combines Project IDX’s cloud IDE capabilities with Firebase’s backend services, hosting, testing, distribution, and AI frameworks like Genkit for a unified, seamless full-stack development experience.

- Agent Empowerment: The new Firebase Studio is fundamentally “Agentic.” Beyond Gemini-assisted coding, it introduces powerful AI agents that actively participate in development, like an “App Prototyping Agent” generating Next.js prototypes from natural language/sketches, and an “App Testing Agent” automating UI/functional test creation and execution without code.

- Market Competition: Facing AI-native competitors like Replit and Cursor, Google aims to offer a compelling alternative by integrating IDX and empowering Firebase Studio with strong agent capabilities, targeting developers seeking AI-driven efficiency and innovation.

- Full-Stack Efficiency: The goal is to provide Firebase and Google Cloud developers with an AI-powered, highly efficient solution covering front-end to back-end, from ideation to deployment, supporting various popular languages and frameworks.

Value Proposition

- For Professionals: Firebase/Google Cloud users gain unprecedented AI support. Agent-driven prototyping and automated testing promise to shorten development cycles and improve code quality. It signifies AI’s evolving role from passive assistant to active collaborator in software engineering, though deep integration might increase platform dependency.

- For Ordinary People: Smarter, more efficient development tools ultimately benefit end-users. Faster iterations mean quicker access to new features and improvements. AI-assisted automated testing helps build more stable, higher-quality apps, enhancing user experience.

Related Links

- Official Docs: Project IDX is now part of Firebase Studio

- Firebase Official Blog Cloud Next Announcement

- Reddit Discussion

- Google Developer Program Updates (Related)

🤖 Samsung Partners with Google Gemini, AI Home Robot Ballie to Debut This Summer

Samsung Electronics and Google Cloud announced an expanded partnership to bring Google’s Gemini large AI model to Samsung’s upcoming home AI companion robot, Ballie. The ball-shaped robot, often showcased at CES, is finally set for release in the US and South Korea in the summer of 2025. Powered by Gemini, Ballie aims to offer more natural conversational interactions and proactively assist with home management.

Core Highlights

- Dual AI Power: Ballie combines Google Gemini’s multimodal reasoning with Samsung’s own AI tech and language models. It processes voice, audio, visual (via camera), and sensor data for smarter, real-time responses.

- Diverse Functionality: The soccer-ball-sized robot rolls around the home, features projection capabilities, controls compatible smart devices (deeply integrated with SmartThings), executes voice commands (lights, reminders), and even offers suggestions (outfits, health) based on Gemini.

- Ecosystem Integration: Designed for deep integration with Samsung’s SmartThings network for seamless device control. Samsung plans third-party app support, potentially expanding its capabilities significantly.

- Market Entry: Ballie’s official launch marks Samsung’s significant step into the emerging AI consumer robotics field. Samsung aims to leverage its hardware prowess, user base, and AI partnership with Google to define the next-gen smart home robot standard.

Value Proposition

- For Professionals: Ballie’s launch creates new platform opportunities in robotics, smart homes, and AI app development, especially if third-party support materializes. The Samsung-Google partnership showcases how large tech companies can combine cutting-edge AI, hardware design, and mature ecosystems to pioneer new consumer categories. Ballie exemplifies multimodal AI application in consumer products.

- For Ordinary People: Signals the arrival of smarter, more proactive home service robots. Moving beyond stationary smart speakers, Ballie offers richer interaction as a mobile companion. It could become a more personalized home hub and assistant, enhancing convenience. However, its mobility, camera, and microphone raise privacy concerns that Samsung must address.

Related Links

- Samsung US Newsroom Announcement

- Samsung Global Newsroom Announcement

- Engadget Report

- Droid Life Report

🧠 OpenAI Fully Upgrades ChatGPT Memory, Altman Hints GPT-4.1/o3/o4-mini Are Coming Soon

OpenAI CEO Sam Altman announced a major upgrade to ChatGPT’s Memory feature; it can now reference all past conversations to provide more personalized and coherent service. Altman believes long-term user understanding is key. The feature rolls out first to Plus/Pro subscribers. Concurrently, Altman confirmed o3 and o4-mini models are coming soon, while reports suggest GPT-4.1 and its mini/nano versions might debut as early as next week.

Core Highlights

- Memory Evolution: The core upgrade expands memory scope. A new “Reference chat history” option lets ChatGPT automatically learn from all past dialogues, building a comprehensive user profile to understand preferences, style, and context for more natural interactions.

- New Model Signals: Altman’s teaser for o3/o4-mini, combined with media reports on the imminent GPT-4.1 series (main, mini, nano) and code evidence, strongly suggests OpenAI is preparing a suite of new models spanning flagship upgrades to cost-effective and specialized (e.g., reasoning) options.

- Personalization Push: Altman sees enhanced memory as key to deep AI personalization, envisioning AI that “knows you over your life.” This highlights OpenAI’s strategy to shift AI from a general tool to a personalized companion, focusing on long-term user relationships.

- User Control: Addressing privacy concerns, OpenAI emphasizes user control. Users can disable “Reference chat history” or turn off Memory entirely, tell ChatGPT what to remember or correct what it has remembered, and use “Temporary chat” mode, which isn’t saved to memory.

- Industry Race: Enhanced memory and personalization are new battlegrounds for top AI firms. Google Gemini and Microsoft Copilot recently introduced similar features, indicating a consensus on using personalized experiences to boost user stickiness and differentiation.

Value Proposition

- For Professionals: Highlights personalization’s role in building competitive AI moats. AI that learns user preferences gains higher stickiness. Developers need to focus more on leveraging user data and long-term interaction value. Combined with lightweight models, this could enable personalized AI in more resource-constrained scenarios, while intensifying the challenge of balancing utility and privacy.

- For Ordinary People: A ChatGPT with better memory means smoother, more efficient interactions without repeating context or preferences. AI can offer more tailored help. However, more extensive personal data collection increases privacy risks, requiring users to manage settings carefully. Over-personalization might also lead to filter bubbles.

Related Links

- Sam Altman’s X Feed (Look for relevant posts)

- OpenAI Community Discussion on Memory Upgrade

- TechRadar Coverage

- The Hindu Coverage

- AIbase Report on New Models

💪 Google Releases Its Strongest AI Inference Chip Ironwood, Several AI Models Receive Major Updates

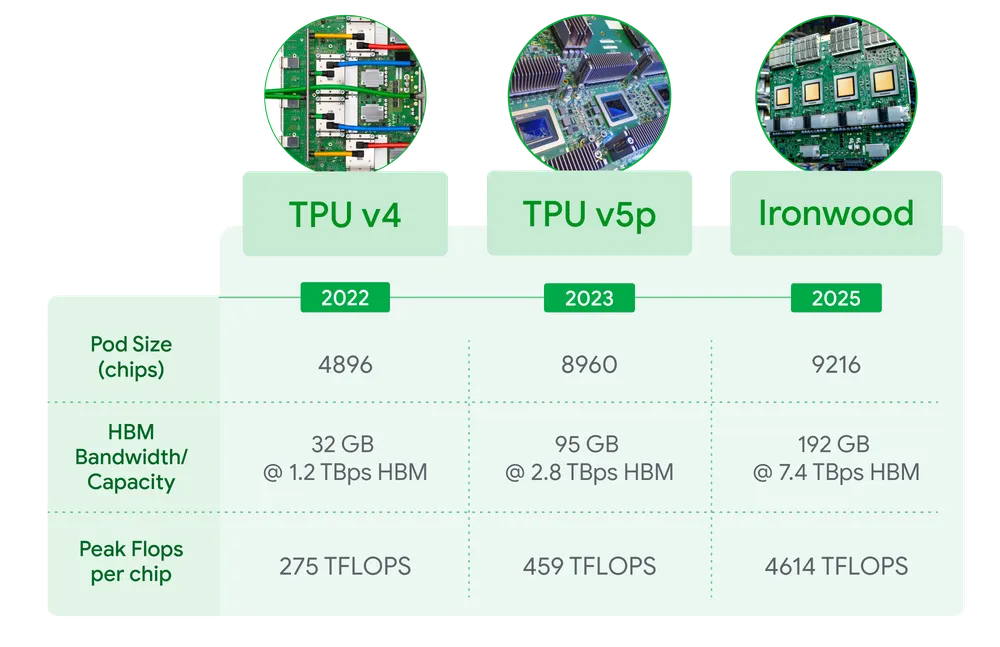

At Google Cloud Next 25, Google unveiled its seventh-generation TPU, “Ironwood” (TPU v7p), touted as its most powerful and scalable TPU to date and the first designed explicitly for inference. It boasts significant performance and energy efficiency gains over its predecessors. Google also announced major updates to its Vertex AI platform and key models like Veo 2 (video), Imagen 3 (image), Lyria (music), and Gemini Code Assist (coding).

Core Highlights

- Inference Beast: Ironwood is specifically optimized for AI inference, efficiently running complex “thinking models” such as LLMs and mixture-of-experts (MoE) models. Inference performance is reportedly 3600x that of the first Cloud TPU, with 2x the performance per watt of the previous-generation Trillium (TPU v6e), and it supports the FP8 format.

- Hardware Muscle: Features 192GB HBM per chip (6x Trillium), 7.2 TBps HBM bandwidth (4.5x Trillium), and 1.2 Tbps ICI interconnect bandwidth (1.5x Trillium). Max cluster scales to 9,216 liquid-cooled chips, reaching 42.5 Exaflops peak FP8 compute. Likely uses chiplet design and integrates 3rd gen SparseCore.

- Omni-Modal Platform: Vertex AI adds the text-to-music model Lyria, which Google says makes it the only platform natively offering generative models across all major modalities (text, image, voice, video, music).

- Model Evolution: Veo 2 (video) gets editing features (inpainting, outpainting, composition guidance); Imagen 3 (image) enhances detail, lighting, and inpainting; Chirp 3 (speech) improves voice cloning, language support, and multi-speaker handling; Gemini Code Assist (coding) gains initial Agent capabilities for complex, multi-step tasks.

- Agent Synergy: Google reiterated the importance of the A2A protocol and showcased Gemini Code Assist’s Agent capabilities.
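The hardware figures quoted above imply a few numbers that are not stated outright. The short calculation below derives them using only the values in this digest; the variable names are illustrative, and units are kept exactly as reported (TBps for HBM bandwidth, Tbps for ICI).

```python
# Figures as quoted in this digest; units kept exactly as reported.
CHIPS_PER_POD = 9216
POD_PEAK_FP8_EXAFLOPS = 42.5
IRONWOOD = {"hbm_gb": 192, "hbm_bandwidth_TBps": 7.2, "ici_bandwidth_Tbps": 1.2}
RATIO_VS_TRILLIUM = {"hbm_gb": 6, "hbm_bandwidth_TBps": 4.5, "ici_bandwidth_Tbps": 1.5}

# 1 exaflop = 1000 petaflops, so divide the pod total across all chips.
per_chip_pflops = POD_PEAK_FP8_EXAFLOPS * 1000 / CHIPS_PER_POD

# Total HBM capacity of a full pod, in GB.
pod_hbm_gb = CHIPS_PER_POD * IRONWOOD["hbm_gb"]

# Trillium values implied by the quoted "Nx Trillium" ratios.
implied_trillium = {k: IRONWOOD[k] / RATIO_VS_TRILLIUM[k] for k in IRONWOOD}

print(f"Peak FP8 per chip: {per_chip_pflops:.2f} PFLOPS")
print(f"Total HBM in a full pod: {pod_hbm_gb / 1e6:.2f} PB")
for name, value in implied_trillium.items():
    print(f"Implied Trillium {name}: {value:.1f}")
```

Under these figures, each chip peaks at roughly 4.61 PFLOPS of FP8 compute, a full pod holds about 1.77 PB of HBM, and the ratios imply a Trillium chip with 32 GB of HBM, 1.6 TBps of HBM bandwidth, and 0.8 Tbps of ICI bandwidth.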

Value Proposition

- For Professionals: Ironwood’s inference performance and efficiency could significantly cut costs for large-scale AI deployment, especially of LLMs and MoE models, making complex apps more feasible. Vertex AI offers a compelling end-to-end solution (hardware to models) for Google Cloud users. Model updates provide powerful tools for creative, media, and other industries.

- For Ordinary People: Underlying AI capability improvements will eventually reach users via Google services and third-party apps. Expect faster, smarter search results, more natural voice interactions, and higher-quality AI-generated content (music, video, images), enriching digital life.

Related Links

- Google Official Blog: Introducing Ironwood

- The Next Platform Report

- Datanami Report

- TechNave Report (Vertex AI Updates)

- TechDogs Report (Cloud Next Overview)

🔒 Breakthrough in Mobile AI Model Security: THEMIS Framework Achieves Efficient Watermarking Protection

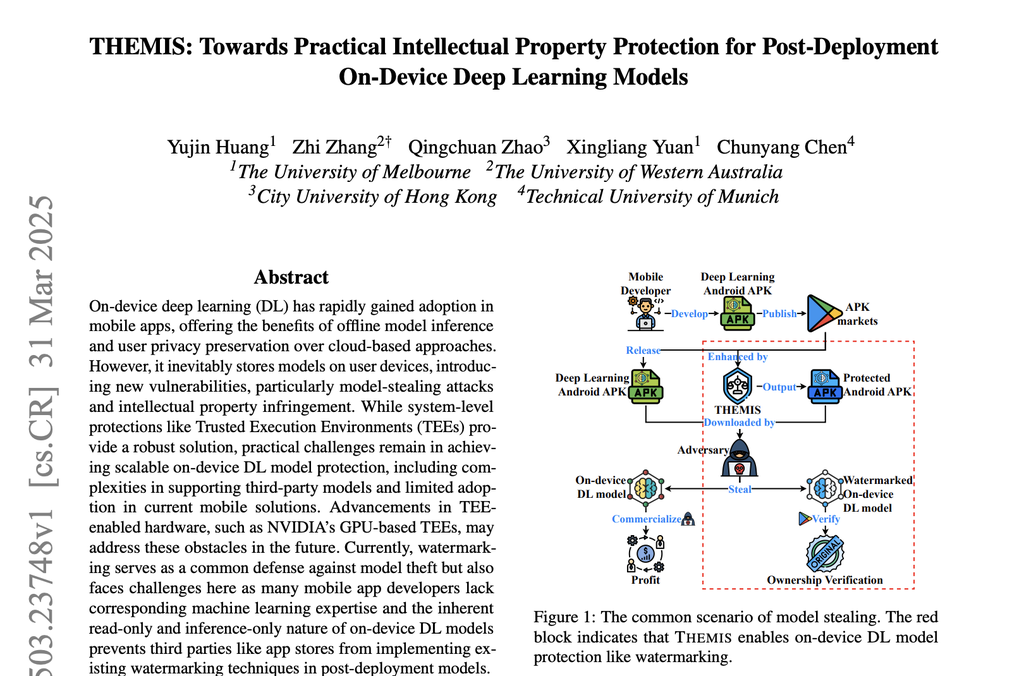

Addressing the risk of illicit extraction and theft of on-device AI models, a joint research team from several universities proposed the THEMIS framework – the first systematic solution designed specifically to protect the Intellectual Property (IP) of post-deployment mobile AI models. By embedding hard-to-remove digital watermarks, it helps developers track and prove ownership, effectively combating model theft. The research has been accepted by the top-tier security conference USENIX Security 2025.

Core Highlights

- Targeting Pain Points: THEMIS tackles three core challenges in mobile AI model protection: ① Encryption & Metadata Loss: Analyzes encrypted model behavior via dynamic execution tracing to recover missing metadata; ② Read-Only Limitation: Introduces innovative “Model Rooting” to parse model schema and generate code, rebuilding a writable copy; ③ Inference-Only Nature: Proposes the novel FFKEW training-free watermarking algorithm, embedding robust watermarks efficiently via a single forward pass.

- Innovative Solution: THEMIS is an automated toolchain integrating multiple novel methods: reverse engineering, runtime analysis, model format parsing/code generation, and the FFKEW algorithm, designed for ease of use.

- Proven Effectiveness: Tested on 403 real-world Android apps with local AI models from Google Play. Successfully watermarked models in 327 apps (81.14% success rate) with minimal impact on model accuracy (average drop < 2%), proving practical robustness.

- IP Guardian: Offers an automated, out-of-the-box solution for developers lacking extensive AI security resources to protect their core AI model assets from copying and misuse, safeguarding their IP and market competitiveness.
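To make the idea of a training-free watermark concrete, here is a toy sketch. It is not the FFKEW algorithm, whose details this digest does not give. It assumes a generic key-based scheme: the owner keeps a secret key input and a target class, runs a single forward pass to read the current logits, and applies a rank-one update to one row of the final layer so that the key maps to the target. All names, the model, and the data are hypothetical.

```python
import random


def logits(weights, features):
    """Forward pass of a toy final layer: one dot product per class."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def predict(weights, features):
    """Return the index of the highest-scoring class."""
    scores = logits(weights, features)
    return max(range(len(scores)), key=scores.__getitem__)


def embed_watermark(weights, key_features, target_class, margin=1.0):
    """Return a copy of weights that classifies key_features as target_class.

    Uses one forward pass and a rank-one update of a single row; no training.
    """
    scores = logits(weights, key_features)
    best_other = max(s for i, s in enumerate(scores) if i != target_class)
    gap = best_other + margin - scores[target_class]
    marked = [row[:] for row in weights]
    if gap <= 0:
        return marked  # the key already maps to the target with enough margin
    norm_sq = sum(x * x for x in key_features)
    # Adding gap * key / ||key||^2 raises the target logit on the key by exactly gap.
    marked[target_class] = [
        w + gap * x / norm_sq for w, x in zip(weights[target_class], key_features)
    ]
    return marked


rng = random.Random(0)
weights = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(3)]  # 3 classes, 8 features
key = [rng.gauss(0, 1) for _ in range(8)]                          # secret key input
target = (predict(weights, key) + 1) % 3                           # a class the key does not map to yet

marked = embed_watermark(weights, key, target)
print("before:", predict(weights, key), "after:", predict(marked, key), "target:", target)
```

Ownership could later be claimed by showing that a suspect model maps the secret key to the target class. A practical scheme would use many keys and measure the effect on ordinary inputs, which this sketch does not attempt.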

Value Proposition

- For Professionals: Provides an unprecedented, effective technical safeguard for companies deploying AI models on mobile (e.g., in healthcare, finance). Helps protect R&D investment, enforce IP, deter piracy, and secure business interests. The automated tool lowers the barrier to implementing advanced model protection. Long-term, effective IP protection incentivizes innovation and fosters a healthier mobile AI ecosystem.

- For Ordinary People: While users don’t directly see watermarks, a more secure mobile AI ecosystem benefits them. Protected developer IP encourages continuous R&D, leading to higher-quality, trustworthy apps. Such research also advances overall AI security technology, enhancing digital safety.