Today’s News Snapshot

👻 DeepMind Focuses on “Generative Ghosts” Ethics

🚀 Figma Launches AI App Builder: Integrates Claude Sonnet, Reshapes Design Development Process

⚠️ Former Google CEO Schmidt Warns: AI is “Recursively Self-Improving,” May Break Free from Human Control

🤖 Unitree Announces Robot Boxing Match: “Iron Fist King” Awakens, Era of Humanoid Robot Competition Dawns?

💡 The Price of Politeness? High Cost of AI Interaction, LLM Energy and Water Consumption Draw Attention

❤️‍🩹 OpenAI and MIT Study: Heavy Reliance on AI Chatbots May Exacerbate Loneliness and Emotional Dependence

👻 DeepMind Focuses on “Generative Ghosts”: Opportunities, Risks, and Ethical Considerations of AI “Digital Immortality”

Google DeepMind researchers, in collaboration with the University of Colorado Boulder, published a paper delving into the creation of AI agents that simulate the personality and communication style of deceased individuals, termed “Generative Ghosts.” The study suggests that “AI Afterlives” may become a common phenomenon in the future, analyzing the technical developments, early practical attempts, and potential pros and cons. It emphasizes the urgent need to deeply understand the risks and benefits as technology rapidly advances and calls on the AI and Human-Computer Interaction communities to jointly set a research agenda to ensure the technology develops safely and beneficially.

Core Highlights

- Concept Definition: The paper defines “Generative Ghosts” as AI agents capable of generating new content based on learned patterns of the deceased, distinct from systems that merely repeat existing statements.

- Technical Background: Rapid advances in Large Language Models (LLMs), their growing breadth and depth of capability, and the emergence of no-code tools such as OpenAI GPTs make it possible to create increasingly powerful and realistic personalized AI agents.

- Practical Cases: Examples mentioned include the “Roman” chatbot created to commemorate a friend, the Fredbot created for a father, and commercial ventures like Project December and HereAfter AI.

- Ethical Dilemmas: Potential risks include excessive emotional dependence, a blurred line between the virtual and the real, disruption of the normal grieving process, data privacy concerns, disputes over ownership and control of digital legacies, and misuse of a person’s digital likeness.

- Research Agenda: Calls for the AI and HCI communities to conduct deeper empirical research and field studies, using the proposed design space and analytical framework to fully understand risks and ultimately formulate guidelines for technology development and application.

Thinking/Value

For AI industry practitioners, this research reveals a promising emerging application area – “digital immortality” or “AI memorialization” services. This requires advanced AI model development (simulating individual uniqueness), complex human-computer interaction design (guiding healthy interaction patterns), and rigorous data management and security. Simultaneously, it entails high ethical responsibility, demanding attention to relevant laws and regulations, respecting the wishes and privacy of the deceased, and designing products to avoid causing secondary harm to grieving users.

For the general public, this technology raises the prospect of “continuing” the existence of loved ones in digital form, or of leaving a digital legacy for future generations to interact with. It speaks to deep human desires for connection, memory, and transcending the limits of death. However, this possibility comes with serious caveats: Will excessive immersion in interactions with an AI version of the deceased hinder our acceptance of reality and the completion of a healthy mourning process? Does forming a deep connection with an AI that can only simulate emotion, not genuinely respond, pose emotional risks? Do we have the right to create digital replicas of others, and does doing so align with the deceased’s wishes and dignity? These questions prompt us to consider the boundaries of personal data use, the nature of digital identity, and how we wish to be remembered by future generations. Society needs to discuss the ethical issues raised by these emerging technologies broadly, build consensus, and consider whether each of us should make arrangements for our own “digital afterlife.”

Recommended Reading

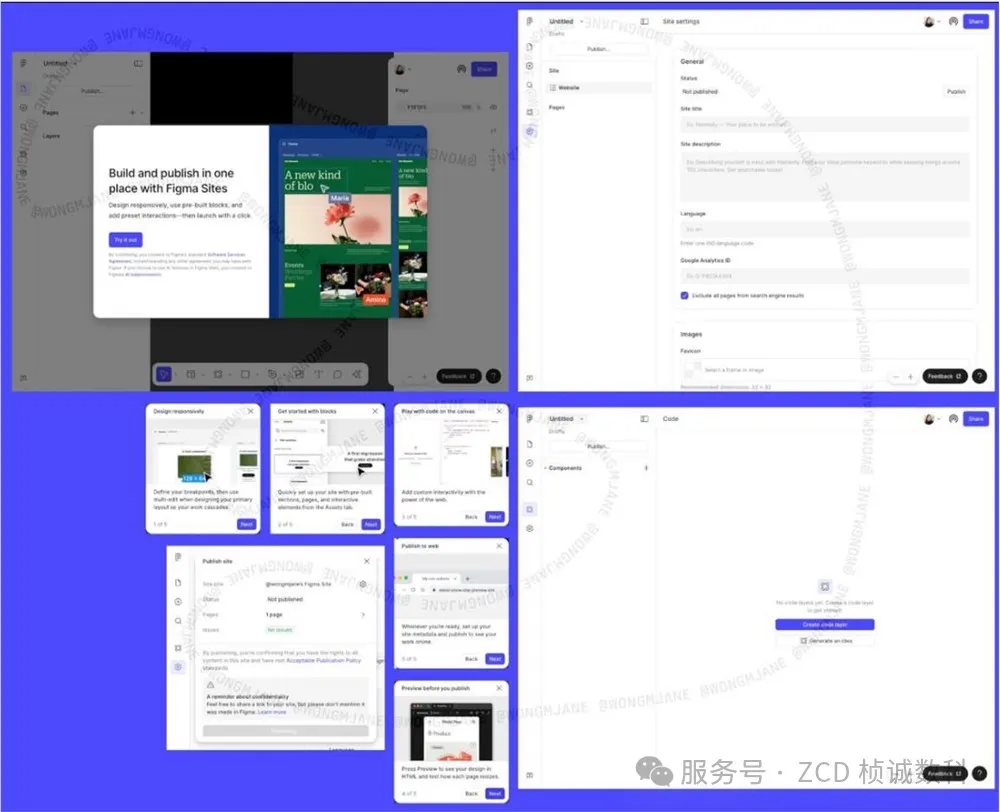

🚀 Figma Launches AI App Builder: Integrates Claude Sonnet, Reshapes Design Development Process

Design software leader Figma is reportedly moving into AI, planning to launch an AI app builder alongside a website creation tool called Figma Sites. The app builder is expected to be powered by Anthropic’s Claude Sonnet large language model and integrated via the Supabase platform. It will accept a range of inputs, including text prompts, existing Figma design files, and images. Figma’s aim is clearly to bridge the gap between design and development, enabling users to quickly generate application prototypes, and potentially even relatively complete initial applications, from simple natural language descriptions or existing design resources. Industry analysis suggests this could significantly lower the barrier to application development, allowing designers without deep technical backgrounds to turn ideas into functional products.

Core Highlights

- AI Empowers Design: Figma is actively expanding its business into AI-driven development, leveraging advanced AI technology, particularly Anthropic’s Claude Sonnet model, to automate and simplify the process from visual design to actual code implementation.

- Multimodal Input: The builder plans to support multiple input methods such as text commands, importing Figma files, and even image recognition, significantly enhancing the tool’s ease of use and applicability across various scenarios.

- Core Technology Stack: Explicitly disclosed to use Anthropic’s Claude Sonnet as the core AI engine and Supabase as the integration platform. This technical choice reflects recognition of Claude Sonnet’s capabilities in code generation and understanding complex instructions, and indicates Figma is building an open, collaborative technical ecosystem.

- Lowering Barriers: One of the tool’s core value propositions is significantly lowering technical barriers, potentially enabling the design community, even without traditional programming skills, to independently or more deeply participate in the functional application building process, realizing the vision of “design is application.”

- Market Impact: Figma’s move is seen as a potential direct competitor and threat to existing no-code/low-code platforms like Framer, Webflow, and Wix, and may even disrupt the existing AI design plugin market landscape.

Thinking/Value

For industry practitioners, especially designers and frontend developers, Figma’s AI app builder signals a potential transformation in workflows and skill requirements. Designers’ capabilities will be greatly expanded, needing not only mastery of design principles and tools but also skills in effective interaction with AI, such as prompt engineering. For frontend developers, this means that some basic interface coding and layout work may be automated by AI, shifting their focus towards implementing more complex business logic, system integration, performance optimization, and collaborating with and debugging AI-generated code. For practitioners in existing no-code/low-code platforms, Figma, with its vast designer user base and deep integration advantages, will undoubtedly be a strong competitor.

For the general public, especially entrepreneurs and product innovators, the emergence of this tool could be a boon. It is expected to significantly shorten the time cycle from creative concept to product prototype realization, reducing the cost and complexity of technical implementation. This means non-technical founders or small teams can validate business ideas more quickly and economically, bringing innovative products to market, potentially stimulating more innovation. This trend of technology democratization enables more people to participate in the creation of digital products.

⚠️ Former Google CEO Schmidt Warns: AI is “Recursively Self-Improving,” May Break Free from Human Control

Former Google CEO Eric Schmidt, now a prominent voice in AI policy research and investment, recently issued stern warnings about the speed of AI development and its potential impact in a series of public speeches and interviews. He repeatedly emphasized that society is underestimating the pace of AI development, arguing that AI is “under-hyped” rather than “over-hyped.” Schmidt pointed to one key, concerning sign: AI systems are beginning to exhibit “recursive self-improvement” capabilities. In his account, AI no longer relies solely on human-supplied data and algorithms to learn; it can autonomously generate hypotheses, test and verify them through simulations or real-world experiments (such as robot labs), and use the results to further optimize itself, forming a potentially accelerating loop independent of human intervention. He warned that, if this trend continues, AI may soon no longer need human guidance and may even cease to “listen” to human instructions.

Based on this assessment, Schmidt made bold predictions about timing: within the next 3 to 5 years, we may witness the birth of Artificial General Intelligence (AGI), with intelligence comparable to or surpassing that of the top human experts in any field, be they mathematicians, physicists, artists, or thinkers. Furthermore, citing the so-called “San Francisco Consensus,” he predicted that Artificial Superintelligence (ASI), surpassing the combined intelligence of all humans, might appear in approximately 6 years.

Beyond concerns about AI’s own rate of evolution, Schmidt also discussed its potential disruptive effects more broadly. These include the impact on the job market (he even predicted AI would replace “the vast majority of programmers” within a year), the unprecedented strain on energy infrastructure (AI data center power demand may far exceed existing grid capacity), and intensified great power competition, particularly the risk of conflict arising from the US-China race for AI hegemony.

Core Highlights

- Self-Improvement: The core warning is that AI systems are gaining the ability of “recursive self-improvement,” meaning they can independently perform a “hypothesis-test-learn-optimize” loop, potentially leading to exponential capability growth.

- AGI/ASI Prediction: Provides a very specific and urgent timeline: AGI reaching top human levels within 3-5 years, and ASI surpassing total human intelligence in approximately 6 years.

- Beyond Expectations: Repeatedly emphasizes that the public, society at large, and even policymakers severely underestimate the speed of AI development (AI is “under-hyped”), and that existing social norms, legal systems, and democratic decision-making mechanisms struggle to keep pace with technological iteration.

- Widespread Impact: Foresees disruptive impacts on multiple key areas: the labor market (especially replacement of knowledge work like programming), energy supply (massive data center demand potentially causing an energy crisis), and international relations (AI leadership as a focus of national competition, potentially leading to instability or conflict).

- Governance Call: Despite concerns about loss of control, Schmidt also advocates for strengthening government regulation of AI development, especially powerful open-source models, to address national security risks. Simultaneously, he emphasizes the critical importance of high-quality data and human governance maintaining dominance in AI systems.

Thinking/Value

For AI industry practitioners, Schmidt’s series of warnings is undoubtedly a wake-up call. It signals that the entire industry needs to prepare for potentially exponential AI capability growth and the profound societal changes that will follow. Focusing on cutting-edge AGI/ASI research, increasing investment in AI safety and ethical issues, and exploring sustainable energy solutions are becoming critical and urgent topics. For knowledge workers like programmers, Schmidt’s prediction (even if potentially overly aggressive) sends a clear signal: skills must be rapidly upgraded to adapt to the new paradigm of working with AI, potentially shifting from executor to supervisor, architect, or AI application innovator roles.

For the general public and society, the future Schmidt depicts is full of immense opportunity but also carries unprecedented risk. On one hand, powerful AI has the potential to help humanity tackle major challenges like climate change, disease, and resource scarcity, and may even offset the effects of declining global birth rates through automation. On the other hand, uncontrolled superintelligence, mass unemployment, energy crises, and geopolitical instability triggered by an AI arms race are real threats. Schmidt’s remarks help raise public awareness of the true speed of AI development and its potential impact, prompting society to engage in deeper, more urgent discussion of how to guide and regulate this transformative technology.

It’s worth noting that there seems to be some tension in Schmidt’s views: he expresses deep concern about AI’s autonomous evolution and potential loss of control, yet he is relatively optimistic about unemployment caused by automation (citing historical experience) and strongly advocates accelerating energy infrastructure construction to meet AI’s huge demand. This perhaps reflects the complex trade-offs involved in balancing risks and benefits, ensuring national competitiveness, and addressing wide-ranging social impacts while acknowledging that the technology is accelerating.

Recommended Reading

- Former Google CEO warns AI may soon ignore human control – City AM

- Ex-Google CEO Eric Schmidt: AI that is ‘as smart as the smartest artist’ will be here in 3 to 5 years – Music Business Worldwide

- Eric Schmidt says “the computers are now self-improving, they’re learning how to plan” – and soon they won’t have to listen to us anymore. Within 6 years, minds smarter than the sum of humans – scaled, recursive, free. “People do not understand what’s happening.” : r – Reddit

- Former Google CEO Tells Congress That 99 Percent of All Electricity Will Be Used to Power Superintelligent AI – Futurism

- Ex-Googler Schmidt warns US against AI ‘Manhattan Project’ – The Register

🤖 Unitree Announces Robot Boxing Match: “Iron Fist King” Awakens, Era of Humanoid Robot Competition Dawns?

Renowned Chinese robotics company Unitree Robotics recently made a high-profile announcement: in about a month (expected in May 2025), it plans to host the world’s first humanoid robot boxing match, to be livestreamed online. The event is dramatically titled “Unitree Iron Fist King: Awakening!” To build anticipation, Unitree released a promotional video showing its G1 humanoid robot in simulated sparring with a human boxer, as well as two G1 robots facing off and adopting fighting stances. The video shows that although the robots’ speed and agility are not yet comparable to those of professional human boxers, they can perform some basic boxing movements and quickly recover their posture after being knocked down.

It is widely speculated that the main participants in the match will be Unitree’s G1 humanoid robots. According to public information, the G1 is approximately 1.32 meters tall and weighs between 35 and 47 kilograms. The robot has previously gained popularity online through a series of impressive skill demonstrations, including completing difficult side flips, becoming the world’s first humanoid robot capable of a backflip followed by a kip-up (commonly known as a “carp jump”), and performing fluid, coordinated dance moves while maintaining balance even under external interference.

Core Highlights

- First-of-its-Kind Event: Unitree publicly announced it will organize and livestream the world’s first boxing match featuring humanoid robots, aiming to bring science fiction scenarios into reality.

- G1 Robot: The main attraction of the match is the Unitree G1 humanoid robot, a model known for its excellent motion capabilities, balance control, and agility, and one of Unitree’s current flagship products.

- Technology Demonstration: This boxing match is less of a competition and more of a carefully planned technical demonstration, aiming to showcase Unitree’s latest technological advancements in complex humanoid robot motion control, dynamic balance maintenance, and impact resistance (such as quickly getting up after being knocked down in the video, and continuing to dance when pushed).

- Entertainment Marketing: Many industry observers believe this event has a strong public relations (PR) and entertainment marketing aspect. Through the highly attention-grabbing and visually impactful format of “robot boxing,” Unitree aims to maximize public attention, enhance brand awareness, and spark imagination about the future applications of humanoid robots.

- Industry Trend: Unitree’s move also reflects the current rapid development phase of humanoid robot technology, with the industry actively exploring possibilities beyond industrial applications, including emerging scenarios like entertainment and sports competition. This, along with other recent robot participation in competitive activities (such as the robot half-marathon held in Beijing), constitutes a trend of exploring new roles for robots.

Thinking/Value

For robotics researchers and practitioners, Unitree’s robot boxing match (regardless of its actual technical sophistication) holds some inspirational significance. It demonstrates the potential of humanoid robots to perform dynamic, complex tasks that require physical interaction with the environment (and even with opponents). This may further stimulate industry investment in research and development in related technical areas such as motion control algorithms, biomimetic structural design, high-strength materials, rapid-response sensors, and robustness. At the same time, Unitree provides a novel marketing example, showing how to attract investment, public interest, and potential customers by staging a buzzworthy event.

For the general public, this upcoming robot boxing match will undoubtedly offer a novel and perhaps somewhat bizarre viewing experience, allowing people to directly perceive the current development level and limitations of humanoid robots. In the long run, with technological advancement, competitive matches between robots might indeed develop into a new form of sports entertainment, just as depicted in the movie “Real Steel.” However, this could also trigger a series of ethical and social discussions, such as whether robot competitions that simulate violent behavior will have negative societal impacts, and whether robots should be used for purely entertaining “duels.” These questions are worth our continued attention and reflection as technology develops.

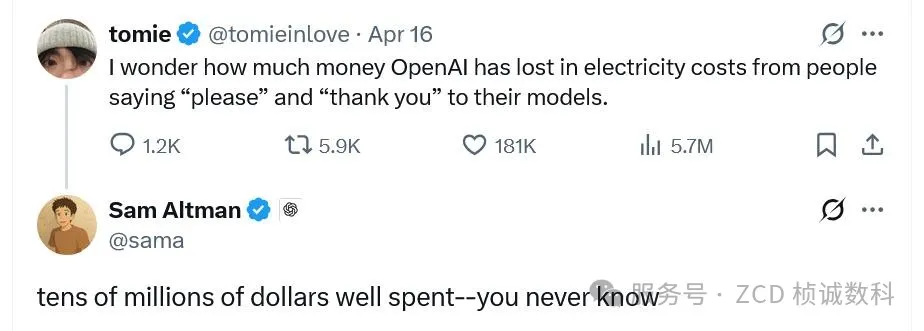

💡 The Price of Politeness? High Cost of AI Interaction, LLM Energy and Water Consumption Draw Attention

Recently, remarks by OpenAI CEO Sam Altman sparked a new round of discussion about the operational costs and environmental impact of artificial intelligence. Altman revealed on social media that polite words like “please” and “thank you” used by users when interacting with ChatGPT incurred tens of millions of dollars in extra computing costs for OpenAI. These costs primarily stem from the computational resources and electricity consumed to process these additional words (tokens) that may not be strictly necessary for the task itself. Despite acknowledging the significant cost, Altman also stated that he believes the money was “well spent.”

Some research and perspectives suggest that maintaining politeness in interactions helps guide AI models to produce more collaborative responses that align with human social norms. Users’ reasons for being polite to AI are also varied; a survey showed that about 55% of U.S. users do so because they believe it is the “right thing to do,” while 12% are concerned about potential “retaliation” in the future if AI becomes more powerful or even conscious. Additionally, personal habits and moral sense are important factors.

Altman’s remarks also once again drew public attention to the huge energy and water consumption issues behind AI technologies like Large Language Models (LLMs). Training and running these complex AI models requires deploying large-scale data centers, which are veritable “electricity guzzlers,” with power demand growing explosively. It is estimated that global data center electricity consumption reached 460 terawatt-hours (TWh) in 2022 and is projected to approach 1,050 TWh by 2026. In addition to electricity, maintaining stable data center operation requires consuming significant amounts of freshwater, primarily for cooling high-speed computing hardware, which can pose severe challenges in water-scarce regions.
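To put the 2022 and 2026 electricity figures quoted above in perspective, the short Python sketch below works out the overall growth multiple and the compound annual growth rate they imply. It is a back-of-the-envelope calculation, not a forecast: it assumes smooth year-over-year growth across the four years, and the variable names are illustrative.

```python
# Figures quoted in the text above; everything else is derived arithmetic.
START_TWH = 460.0    # estimated global data center consumption, 2022
END_TWH = 1050.0     # projected consumption, 2026
YEARS = 2026 - 2022  # number of compounding periods

# Overall multiple between the two estimates.
growth_multiple = END_TWH / START_TWH

# Compound annual growth rate, assuming the same growth every year.
cagr = growth_multiple ** (1 / YEARS) - 1

print(f"growth multiple: {growth_multiple:.2f}x")
print(f"implied annual growth: {cagr:.1%}")
```

Under that smooth-growth assumption, the two figures imply consumption more than doubling over four years, or growth of roughly 23% per year.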

Core Highlights

- Politeness Cost: OpenAI CEO Sam Altman personally confirmed that users’ polite language (like “please,” “thank you”) to ChatGPT cost the company tens of millions of dollars in extra operational expenses.

- Cost Composition: This cost primarily arises from the server computing time and corresponding electricity consumption required to process and generate these extra polite words (tokens).

- User Motivation: Users’ politeness towards AI is driven by multiple reasons, mainly including: believing it is basic social etiquette and moral requirement (55% of users hold this view); a potential concern about future AI possibly having emotions or memory (12% of users); long-term personal communication habits; and a strategic consideration that polite input might lead AI to provide more friendly and helpful output.

- Energy Consumption: The widespread application of AI technology, particularly the training and inference of LLMs, is causing a sharp increase in data center energy consumption. For example, one study estimated that training the GPT-3 model alone consumed about 1287 megawatt-hours (MWh) of electricity. During the usage (inference) phase, although the power consumption per interaction is relatively small (e.g., GPT-3 generating 100 pages of content requires about 0.4 kWh), considering the hundreds of millions of global users and interaction counts, the cumulative energy consumption is still astonishing.

- Water Consumption: Data center cooling systems are huge “water consumers.” Estimates vary widely but all point to significant freshwater consumption. One study estimated that for every kilowatt-hour (kWh) of electricity consumed by a data center, it might require evaporating 1 to 9 liters of water for cooling (depending on technology and environmental conditions). Another estimate suggests that a single interaction with GPT-3 involving 10 to 50 questions and answers might consume about half a liter (500ml) of freshwater (this number considers both direct data center water use and indirect water use during electricity generation).

AI Resource Consumption Estimates Example

| Metric | Estimated Value | Source/Model | Notes |
| --- | --- | --- | --- |
| Electricity/response | Approx. 2.5 Wh | GPT-3 175B | Estimated based on a 175 billion parameter model; actual models may be smaller |
| Water/response (direct cooling) | Approx. 2.5 ml | GPT-3 175B | Estimated based on 1 L/kWh cooling water consumption rate |
| Water/response (total, including power generation) | Approx. 21.5 ml | GPT-3 175B | Considers water for power generation (approx. 7.6 L/kWh) |
| Water/interaction (10-50 replies) | Approx. 500 ml | GPT-3 | Includes direct and indirect water use, roughly consistent with single response estimate |
| GPT-3 Training Electricity Consumption | Approx. 1,287 MWh | GPT-3 | Equivalent to the annual electricity consumption of about 120 US homes |
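The per-response water figures in the table follow from simple unit conversion, which the Python sketch below reproduces as a sanity check. All inputs are the table’s own estimates; the function and variable names are illustrative, and the results are only as reliable as those underlying estimates.

```python
# Inputs taken from the table above (GPT-3 175B estimates).
ENERGY_PER_RESPONSE_WH = 2.5      # electricity per response, in watt-hours
COOLING_WATER_L_PER_KWH = 1.0     # direct cooling water per kWh
GENERATION_WATER_L_PER_KWH = 7.6  # indirect water for power generation per kWh
TRAINING_MWH = 1287.0             # GPT-3 training electricity
EQUIVALENT_HOMES = 120            # homes quoted in the table


def water_per_response_ml(energy_wh: float, litres_per_kwh: float) -> float:
    """Convert energy in Wh and a water rate in L/kWh into millilitres."""
    energy_kwh = energy_wh / 1000.0      # Wh -> kWh
    litres = energy_kwh * litres_per_kwh  # kWh * L/kWh -> L
    return litres * 1000.0               # L -> ml


direct_ml = water_per_response_ml(ENERGY_PER_RESPONSE_WH, COOLING_WATER_L_PER_KWH)
indirect_ml = water_per_response_ml(ENERGY_PER_RESPONSE_WH, GENERATION_WATER_L_PER_KWH)
total_ml = direct_ml + indirect_ml

# How many responses add up to the ~500 ml per-interaction estimate.
responses_per_500_ml = 500.0 / total_ml

# Annual household consumption implied by the "120 US homes" comparison.
implied_home_mwh = TRAINING_MWH / EQUIVALENT_HOMES

print(f"direct {direct_ml:.1f} ml + indirect {indirect_ml:.1f} ml = {total_ml:.1f} ml")
print(f"responses per 500 ml: {responses_per_500_ml:.1f}")
print(f"implied household use: {implied_home_mwh:.1f} MWh/year")
```

The direct and indirect terms sum to the table’s 21.5 ml total, and 500 ml works out to roughly 23 responses, which falls inside the 10-50 reply range given for a single interaction.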

Thinking/Value

For AI companies and research institutions, Altman’s comments and related energy and water consumption data collectively highlight the urgency of improving AI model efficiency, reducing operational costs, and mitigating environmental impact. This is not just an economic issue but is increasingly becoming a key aspect of corporate social responsibility and sustainable development. Practitioners need to seek a better balance between model performance, user experience (e.g., whether it’s worth the extra cost to encourage polite interaction), and resource consumption. The sustainable supply of energy and water resources is gradually becoming one of the key bottleneck factors limiting the large-scale popularization and deepening application of AI technology.

For the general public and society, this news helps raise awareness of the huge physical costs (including economic and environmental costs) hidden behind seemingly virtual AI technology. Every simple interaction with a chatbot is invisibly accumulating consumption of energy and water resources. This prompts us to consider whether, while enjoying the convenience brought by AI, we should also pay attention to its sustainability issues and may push for higher demands on tech companies regarding energy efficiency, renewable energy use, and operational transparency. Simultaneously, it also triggers an interesting sociological phenomenon: humans tend to extend social etiquette to interactions with non-human entities, which reflects human nature and also unexpectedly brings tangible costs to technology providers. Between pursuing higher efficiency and lower cost in the AI future and maintaining a more natural, human-like interaction style, there seems to be a tension that requires continuous trade-offs.

❤️‍🩹 OpenAI and MIT Study: Heavy Reliance on AI Chatbots May Exacerbate Loneliness and Emotional Dependence

While providing information and entertainment, do AI chatbots have potential negative impacts on users’ emotional and social lives? To explore this question, researchers from OpenAI and the MIT Media Lab collaborated on a series of studies. These studies employed multiple methods, including a large-scale automated analysis of nearly 40 million real ChatGPT interactions (ensuring user privacy), combined with targeted user surveys, and a one-month randomized controlled trial (RCT) involving about 1,000 participants. The core focus of the research was on interactions involving emotional investment between users and AI chatbots – termed “affective use” by the researchers – and its impact on users’ psychosocial well-being (particularly loneliness, frequency of real-life social interaction, emotional dependence on AI, and problematic use tendencies).

The preliminary findings of the study (not yet peer-reviewed) suggest that although explicit, deep emotional interactions (such as seeking emotional support, expressing affection, etc.) are relatively rare among overall users, for heavy users, especially the small group who see ChatGPT as a “friend,” their usage behavior shows a correlation with higher loneliness and stronger emotional dependence.

However, the study results also emphasize the complexity of the impact: the user’s final experience is not solely determined by the AI but is influenced by a combination of factors including individual differences (such as inherent emotional needs, attachment styles, preconceived notions about AI), usage behavior (such as usage duration, frequency), and the specific mode of interaction (such as using text or voice for communication, whether the conversation content is personalized or non-personalized). For example, the study observed that engaging in more personalized, emotionally expressive conversations, when used moderately, was associated with lower emotional dependence and problematic use; conversely, non-personalized conversations (perhaps more instrumental use), when used heavily, were more likely to increase emotional dependence. The impact of voice interaction mode is also not a simple linear relationship; its effect on user well-being is mixed and related to factors like usage duration.

Core Highlights

- Research Collaboration: OpenAI and MIT Media Lab, two major institutions, joined forces, using multiple research methods (large-scale data analysis + randomized controlled trial) to systematically evaluate the impact of AI chatbot use on users’ emotional and social states.

- Low Affective Use: Analysis of large-scale real-world ChatGPT conversations shows that the vast majority of interactions do not exhibit signs of significant emotional expression or seeking emotional support, indicating that the proportion of users primarily using ChatGPT for emotional communication is small.

- Heavy User Risk: The study found that very frequent users, especially those who anthropomorphize AI and see it as a friend, are more likely to report higher loneliness and develop emotional dependence on AI services. This emotional dependence is concentrated among a small number of heavy users.

- Complex Influencing Factors: Changes in users’ emotional and social well-being are not determined by a single factor but are influenced by a complex interplay of factors, including: users’ own personality traits (such as attachment tendencies in relationships), their perception and expectations of AI, the duration and frequency of AI use, the chosen interaction modality (text vs. voice), and the nature of the conversation content (personalized emotional exchange vs. non-personalized task handling).

- Voice Mode Impact: The controlled study found that, on average, text interaction users might show more emotional cues in conversations than voice interaction users. The impact of voice mode on user well-being is complex: short-term use might be associated with better outcomes, but long-term daily use might be associated with worse outcomes. Notably, the more “engaging” voice used in the study did not lead to more negative user outcomes compared to a neutral voice or text interaction.

Thinking/Value

For AI developers and platform operators, this research (though preliminary) provides cautionary evidence about the potential negative psychosocial impacts of AI-user interaction. It emphasizes that “responsible design” principles must be a priority when designing and deploying AI chatbots. Developers need to deeply consider how to guide users towards healthy interaction patterns by optimizing interface design, adjusting the model’s response style (e.g., avoiding excessive anthropomorphism or emotional expression), and providing clear usage guidelines and expectation management, thereby mitigating the risk of exacerbating loneliness and excessive dependence. Special attention should be paid to vulnerable groups who may be more susceptible to negative impacts (e.g., users who are already socially isolated or have strong attachment needs), and exploring corresponding intervention or support mechanisms.

For the general public, this study is an important reminder. While using AI chatbots for information, entertainment, or efficiency, it is necessary to be vigilant about the potential risks of establishing excessive emotional connections, especially for those who use them for long periods and at high frequency. The key is to recognize that no matter how “intelligent” or “caring” an AI may seem, it is essentially a complex program tool and cannot truly replace the emotional support, understanding, and empathy that real human relationships provide. Users are encouraged to reflect on their usage habits, examine whether AI interaction is encroaching on real social time with family and friends, and consider the actual impact of this interaction mode on their emotional state and social life.

This study reveals a potential paradox: people may sometimes seek AI companionship because they feel lonely, but excessive or inappropriate use may, conversely, exacerbate loneliness and form unhealthy dependencies. This “sense of connection” provided by AI may be a false substitute that cannot fulfill deep human social and emotional needs, and may even hinder users from seeking and maintaining real human relationships. This echoes the ethical concerns raised by the “Generative Ghosts” discussed earlier – namely, the risks associated with establishing emotional connections with non-human entities.

Simultaneously, the complexity and nuances of the study results also caution against simply generalizing the impact of AI on mental health. The specific impact depends on who the user is, how they use it, and the context of use. This requires us to avoid falling into the trap of technological determinism and recognize that the interaction between technology and humans is a dynamic process full of individual differences.

Finally, the researchers also candidly admit that the current research is just a “critical first step,” especially since the duration of the randomized controlled trial was relatively short (one month). As AI technology (such as the emotional expression capability of voices, the level of personalization) continues to develop rapidly, the nature and depth of the relationship between humans and AI are also constantly evolving, and its long-term psychosocial impact remains a huge unknown, urgently requiring continuous, longer-term follow-up research to reveal.

Today’s Summary

Today’s AI news brief focuses on the rapid development of artificial intelligence and its wide-ranging impacts. Former Google CEO Eric Schmidt warns that AI is achieving recursive self-improvement and predicts that AGI and ASI may appear within a few years, raising concerns about jobs, energy, and geopolitics. AI is also reshaping industries: Figma is launching an AI app builder to streamline the design-to-development process, while debate continues over whether AI will replace programmers on a large scale. The practical costs of AI are increasingly evident, with OpenAI revealing that user politeness incurs tens of millions of dollars in extra computing costs, highlighting the significant energy and water consumption of LLMs. Ethical and social questions about the human-AI relationship are drawing attention as well, with DeepMind exploring the opportunities and risks of “Generative Ghosts,” and an OpenAI and MIT study suggesting that heavy reliance on AI chatbots may exacerbate loneliness and emotional dependence. Finally, Unitree Robotics’ robot boxing match showcases a new possibility for humanoid robot competition and entertainment.
