Today’s News Highlights

🤖 DeepSeek Releases Prover-V2: Further Evolution of AI in Mathematical Proof

🐾 Traini Pet Translator: Can AI Understand “Woof-Woof” Language?

📄 PaperCoder Emerges: AI Automatically Reproduces Top Conference Paper Code

🎨 Doubao Super Creative 1.0: Comprehensive Upgrade of AI Drawing Functionality

01🤖 DeepSeek Releases Prover-V2: Further Evolution of AI in Mathematical Proof

Artificial intelligence startup DeepSeek has quietly released its latest specialized large model series, DeepSeek-Prover-V2, on Hugging Face. The series focuses on mathematical theorem proving, specifically within the Lean 4 formal proof environment. The release continues DeepSeek’s open-source approach with two versions, at 7B and a massive 671B parameters, and has drawn wide industry attention for its innovative “cold start” training method and top-tier results on mathematical benchmarks, showcasing DeepSeek’s strong capabilities and ambitions in automated mathematical reasoning.
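For readers unfamiliar with Lean 4, here is a toy example of the kind of formal statement and machine-checked proof these models are trained to produce. It was written for this digest and is not taken from DeepSeek’s release or from any benchmark; it assumes a Lean 4 project with Mathlib available, and the theorem name `toy_two_mul_le` is hypothetical. The `have` steps mimic how a proof can be broken into smaller subgoals that are each closed by a simple tactic.

```lean
import Mathlib

-- Toy theorem (hypothetical name): for all reals a and b, 2ab ≤ a² + b².
theorem toy_two_mul_le (a b : ℝ) : 2 * a * b ≤ a ^ 2 + b ^ 2 := by
  -- Subgoal 1: a square is nonnegative.
  have h1 : 0 ≤ (a - b) ^ 2 := sq_nonneg (a - b)
  -- Subgoal 2: expand the square algebraically.
  have h2 : (a - b) ^ 2 = a ^ 2 - 2 * a * b + b ^ 2 := by ring
  -- Combine both subgoals with the arithmetic tactic nlinarith.
  nlinarith [h1, h2]
```

Lean only accepts the theorem if every step checks, which is what makes pass rates on formal benchmarks objectively verifiable.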

Key Highlights

- Dual Model Release: DeepSeek-Prover-V2-7B (6.91 billion parameters, 32K context) and DeepSeek-Prover-V2-671B (671 billion parameters, 164K context; built on DeepSeek-V3-Base, a Mixture-of-Experts (MoE) model), covering both efficiency and peak performance.

- Focus on Mathematical Proof: The models are specifically focused on formal mathematics, aiming to enhance AI’s rigorous mathematical reasoning, logical deduction, and automated theorem proving abilities within the Lean 4 environment.

- Innovative Training: Uses a “cold start” strategy in which a large model (DeepSeek-V3) decomposes theorems into subgoals and sketches high-level proofs, a smaller model (7B) searches for proofs of the individual subgoals, and the combined results turn informal reasoning into formal proofs. The model is then further optimized with reinforcement learning driven by binary correct-or-incorrect feedback on its generated proofs.

- Leading Performance: The 671B model achieves an 88.9% pass rate on the MiniF2F-test benchmark and solves 49 problems from PutnamBench, state-of-the-art results at the time of release.

- Open-Source Benchmark: Besides open-sourcing the model weights and code, DeepSeek introduced a new benchmark, ProverBench, with 325 mathematical problems spanning a range of fields and difficulty levels, from competitions to textbooks.
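The “cold start” idea of large-model planning plus small-model execution can be sketched as a data-flow skeleton. This is a toy Python illustration, not DeepSeek’s code: the planner (standing in for DeepSeek-V3), the prover (standing in for the 7B model) and the verifier (standing in for the Lean checker) are hypothetical callables, and a “subgoal” here is just a toy claim that a tuple of integers sums to a total. Only theorems whose every subgoal is proved and verified become training examples. As a further assumption of the sketch, the reinforcement-learning reward is binary: 1 when the verifier accepts a proof, 0 otherwise.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Toy subgoal type (assumption): (terms, total) claims that sum(terms) == total.
Subgoal = tuple[tuple[int, ...], int]


@dataclass
class ColdStartExample:
    theorem: str
    subgoals: list[Subgoal]  # decomposition proposed by the planner
    proofs: list[str]        # one verified proof per subgoal


def build_cold_start_data(
    theorems: list[str],
    decompose: Callable[[str], list[Subgoal]],
    prove: Callable[[Subgoal], Optional[str]],
    verify: Callable[[Subgoal, str], bool],
) -> list[ColdStartExample]:
    """Keep only theorems whose every subgoal gets a verified proof."""
    data = []
    for thm in theorems:
        subgoals = decompose(thm)        # large "planner" model
        proofs = []
        for goal in subgoals:
            proof = prove(goal)          # small "prover" model searches
            if proof is None or not verify(goal, proof):
                break                    # one failed subgoal discards the theorem
            proofs.append(proof)
        else:
            data.append(ColdStartExample(thm, subgoals, proofs))
    return data


def binary_reward(goal: Subgoal, proof: Optional[str],
                  verify: Callable[[Subgoal, str], bool]) -> float:
    """Reward 1.0 if the verifier accepts the proof, else 0.0."""
    return 1.0 if proof is not None and verify(goal, proof) else 0.0


# Hypothetical stand-ins for the planner, prover, and Lean verifier.
SKETCHES = {
    "thm_ok": [((1, 2), 3), ((4, 5), 9)],
    "thm_bad": [((1, 1), 2), ((2, 2), 5)],  # the second subgoal is false
}


def toy_decompose(thm: str) -> list[Subgoal]:
    return SKETCHES[thm]


def toy_prove(goal: Subgoal) -> Optional[str]:
    return "norm_num"  # always proposes the same tactic


def toy_verify(goal: Subgoal, proof: str) -> bool:
    terms, total = goal
    return proof == "norm_num" and sum(terms) == total


data = build_cold_start_data(["thm_ok", "thm_bad"], toy_decompose, toy_prove, toy_verify)
print([ex.theorem for ex in data])  # ['thm_ok']
```

Because the verifier, not a human, decides correctness, both the data filter and the reward are fully automatic, which is what makes this recipe attractive for other data-scarce domains with a checkable notion of correctness.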

Value Insights

- For Practitioners:

  - Provides powerful open-source tools for mathematical reasoning, lowering the barrier to entry for automated theorem proving research.

  - Its hybrid training paradigm of “large model planning + small model execution + reinforcement learning optimization” is a useful reference for other specialized domains where training data is scarce (such as code generation and legal analysis).

  - ProverBench offers a new yardstick for evaluating mathematical reasoning capabilities.

  - Caution: Early feedback suggests that such heavy specialization may come at the cost of general capabilities, so the trade-off between specialization and generalization deserves attention.

- For the General Public:

  - Little direct impact in the short term; the models will mainly be used in scientific research and education.

  - In the long term, by advancing basic science, they may indirectly contribute to more advanced technologies.

  - DeepSeek is building an ecosystem around a “model + code + benchmark” combination, which could eventually give rise to math tutoring tools for students.

Recommended Reading

- [DeepSeek-Prover-V2-671B (Hugging Face)]

- [DeepSeek-Prover-V2-7B (Hugging Face)]

02🐾 Traini Pet Translator: Can AI Understand “Woof-Woof” Language?

A pet language translation app called Traini has recently gained popularity. Built on its self-developed “Pet Emotion and Behavior Intelligence System” (PEBI) and “Semantic Space Theory,” it claims to identify 12 emotions in dogs by analyzing millions of dog behavior data points. The app not only attempts to “translate” pet behavior for owners but also tries to “translate” owners’ words into dog barks, aiming to use AI to break down communication barriers between humans and pets and to explore new possibilities for interspecies communication.

Key Highlights

- Emotion Decoding: The core PEBI system, based on “Semantic Space Theory” and over a million dog behavior data points, claims to identify 12 subtle emotions such as happiness and fear.

- Multi-modal Interaction: Users can upload photos, videos, and audio for AI analysis that “translates” pet behavior into human language (claimed accuracy: 81.5%); an experimental “human voice to dog bark” function attempts the reverse direction, making communication two-way.

- Technical Support: Integrates a Transformer architecture for processing behavior sequences and k-nearest-neighbors voice conversion (kNN-VC) for bark-related audio processing, enabling multi-modal analysis.

- Ecosystem Expansion: Includes a built-in PetGPT Q&A chatbot, more than 200 training courses, and behavior tracking; smart collars and AI companions are planned to support pets’ physical and mental health.
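kNN-VC, as published in the research literature, converts audio by replacing each frame of the source recording’s self-supervised speech features with the average of its k most similar frames (by cosine similarity) from a reference recording, then turning the result back into audio with a vocoder. The sketch below shows only that matching step, in plain Python with toy 2-D feature vectors; the real method uses WavLM features and a neural vocoder, and this is not Traini’s implementation. The function names are hypothetical.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def knn_match(source: list[list[float]], reference: list[list[float]],
              k: int = 4) -> list[list[float]]:
    """Replace each source frame with the mean of its k most similar reference frames."""
    converted = []
    for frame in source:
        # Rank reference frames by similarity to this source frame, keep the top k.
        nearest = sorted(reference, key=lambda r: cosine_similarity(frame, r),
                         reverse=True)[:k]
        dim = len(frame)
        # Average the selected reference frames dimension by dimension.
        converted.append([sum(r[i] for r in nearest) / len(nearest) for i in range(dim)])
    return converted


# Toy 2-D "features": two source frames, four reference frames.
source = [[1.0, 0.0], [0.0, 1.0]]
reference = [[0.9, 0.1], [1.0, 0.2], [0.1, 0.9], [0.2, 1.0]]
print(knn_match(source, reference, k=2))  # approximately [[0.95, 0.15], [0.15, 0.95]]
```

Every output frame is built only from reference frames, so the converted audio takes on the reference’s acoustic character while following the source’s frame-by-frame content.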

Value Insights

- For Practitioners:

  - Demonstrates a new direction for AI applications: human-animal interaction.

  - The PEBI system and Semantic Space Theory offer case studies for affective computing and animal behavior research.

  - Its “software + user data + hardware ecosystem” business model (improving models with user-uploaded data, then launching hardware to retain users) is instructive, especially for building competitive moats in vertical markets.

- For the General Public:

  - Meets owners’ desire to understand their pets and strengthen emotional bonds, providing emotional comfort and entertainment.

  - Integrates practical features such as PetGPT and training courses that address everyday pet-care issues.

  - Note: The scientific accuracy of its “translations” has yet to be verified; its entertainment value may outweigh its scientific value.

  - If the health monitoring function matures, it could become a practical early-warning tool for pet health.

Recommended Reading

- [Traini Official Website]

- [Business Wire Report on Traini’s Launch]

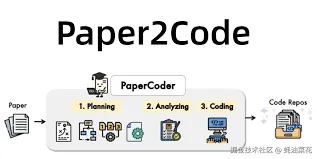

03📄 PaperCoder Emerges: AI Automatically Reproduces Top Conference Paper Code

A research team from the Korea Advanced Institute of Science and Technology (KAIST) has released and open-sourced PaperCoder, an AI system that tackles a well-known pain point: reproducing the algorithms in machine learning papers is time-consuming and laborious. PaperCoder uses a multi-agent framework built on large language models (LLMs) that mimics the human development process (planning, analysis, generation) to automatically turn academic papers into high-quality, runnable codebases. Initial evaluations show high code quality, and most of the original authors who reviewed the output rated it favorably.

Key Highlights

- Addresses a Pain Point: Automates the implementation of algorithms described in papers, easing the reproducibility problem in research and significantly shortening the path from theory to practice.

- Multi-agent Collaboration: Adopts a multi-agent framework with specialized agents responsible for planning (architecture design, configuration files), analysis (interpreting implementation details), and generation (writing modular code), mimicking the software development process.

- Effectiveness Verification: Outperforms existing methods on the Paper2Code benchmark; more importantly, 77% of the original paper authors considered its generated codebase the best implementation, and 85% found it helpful.

- Open-Source Sharing: The project code is fully open-sourced on GitHub, so researchers worldwide can use, improve, and contribute to it, jointly advancing the technology.
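The planning, analysis, and generation flow can be illustrated with a small Python skeleton. This is a hypothetical sketch, not PaperCoder’s code: `run_pipeline` and the three toy agent functions are invented names, and in the real system each stage is an LLM call whose prompts are not reproduced here. It shows each stage consuming the previous stage’s artifacts; as an assumption of the sketch, files are generated in the order fixed during planning, and the code-writing agent can see the files already produced.

```python
from typing import Callable

Planner = Callable[[str], list[str]]               # paper -> ordered file list
Analyst = Callable[[str, str], str]                # (paper, file) -> implementation notes
Coder = Callable[[str, str, dict[str, str]], str]  # (file, notes, files so far) -> code


def run_pipeline(paper: str, planner: Planner, analyst: Analyst,
                 coder: Coder) -> dict[str, str]:
    """Chain planning, analysis, and generation into a {filename: code} repository."""
    files = planner(paper)                         # planning: what to build, in what order
    notes = {f: analyst(paper, f) for f in files}  # analysis: per-file details
    repo: dict[str, str] = {}
    for f in files:                                # generation: follow the planned order
        repo[f] = coder(f, notes[f], dict(repo))   # pass a copy of earlier files as context
    return repo


# Toy agents standing in for LLM calls (hypothetical).
def toy_planner(paper: str) -> list[str]:
    return ["config.yaml", "model.py", "train.py"]


def toy_analyst(paper: str, filename: str) -> str:
    return f"details for {filename}"


def toy_coder(filename: str, notes: str, prior: dict[str, str]) -> str:
    return f"# {filename}: {notes} (after {len(prior)} files)"


repo = run_pipeline("paper text", toy_planner, toy_analyst, toy_coder)
print(repo["train.py"])  # # train.py: details for train.py (after 2 files)
```

Separating the stages keeps each agent’s task narrow, which is the same structured decomposition a human team would use when turning a paper into a codebase.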

Value Insights

- For Practitioners (Researchers/Developers):

  - Significantly improves research efficiency by speeding up the validation and iteration of new ideas, which could drive innovation across the field.

  - Lowers the technical barrier to understanding and implementing complex, cutting-edge algorithms.

  - Its structured, phased multi-agent collaboration offers a promising template for other complex automation tasks (such as automated software development and scientific experiment design).

  - Implication: Even with powerful AI capabilities, verification and oversight by human experts (such as the original authors) remain crucial in rigorous research fields, which calls for effective human-machine collaboration mechanisms.

- For the General Public:

  - The impact is indirect but profound: by accelerating research in AI and related fields, it helps turn advanced technologies into practical, life-improving applications more quickly.

Recommended Reading

- [PaperCoder GitHub Repository]

04🎨 Doubao Super Creative 1.0: Comprehensive Upgrade of AI Drawing Functionality

ByteDance’s AI assistant Doubao recently rolled out a major “Super Creative 1.0” update to the AI drawing tool in its web and PC versions. The upgrade focuses on the experience and efficiency of AI image creation and is organized around five core capabilities: “Understanding You,” “Co-creation,” “Super Efficiency,” “Thousands of Styles,” and “Adaptability.” The goal is for the AI to grasp user intent more accurately, support iterating on ideas, generate images in batches, offer a rich variety of styles, and adapt to different aspect ratios.

Key Highlights

- Understanding Your Intent: Significantly improves the AI’s ability to understand and execute complex, detailed creative instructions (for example, a cute chibi-style (“Q-style”) inflatable look, colors, composition, and proportions).

- Co-creation Iteration: Optimizes the collaboration process between AI and users, making inspiration generation, image creation, and adjustment more seamless, facilitating collaborative creation.

- Efficiency Revolution: Introduces a “batch generation” function which, combined with faster inference in the underlying model, significantly increases image output.

- Thousands of Styles: Building on ByteDance’s accumulated work on visual models (such as Seedream), it offers a wide selection of artistic styles and strong style transfer capabilities.

- Flexible Adaptation: Optimizes adaptability to different aspect ratios (such as 1:1), enabling generated content to better meet the needs of various publishing platforms or application scenarios.
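Adapting to an aspect ratio ultimately means choosing pixel dimensions for the requested ratio. The arithmetic can be sketched as follows. This is a generic illustration, not Doubao’s implementation: the roughly one-megapixel budget and the rule that width and height be multiples of 64 are assumptions chosen for the example (image-generation models often impose size constraints of this kind, but the source does not describe Doubao’s settings), and `size_for_ratio` is a hypothetical name.

```python
import math


def size_for_ratio(ratio_w: int, ratio_h: int,
                   pixel_budget: int = 1024 * 1024, multiple: int = 64) -> tuple[int, int]:
    """Return (width, height) close to ratio_w:ratio_h with about pixel_budget pixels.

    Solves width * height = pixel_budget and width / height = ratio_w / ratio_h,
    then snaps both sides to the nearest multiple of `multiple` (assumed constraint).
    """
    height = math.sqrt(pixel_budget * ratio_h / ratio_w)
    width = height * ratio_w / ratio_h

    def snap(x: float) -> int:
        # Round to the nearest allowed size, never below one block.
        return max(multiple, round(x / multiple) * multiple)

    return snap(width), snap(height)


for ratio in [(1, 1), (16, 9), (9, 16)]:
    print(ratio, size_for_ratio(*ratio))
# (1, 1) (1024, 1024)
# (16, 9) (1344, 768)
# (9, 16) (768, 1344)
```

Holding the pixel count roughly constant keeps generation cost similar across ratios, while snapping to a fixed block size trades a slightly inexact ratio for dimensions the model can handle.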

Value Insights

- For Practitioners (Designers/Creative Workers):

  - Demonstrates how underlying AI capabilities (such as visual models) can be packaged into practical tools for specific workflows, lowering the barrier to using AI.

  - Features like batch generation, aspect-ratio adaptation, and precise intent understanding directly address the efficiency and control needs of creative production, bringing AI drawing tools closer to professional productivity tools.

  - Can serve as a source of inspiration or as a tool for quickly producing prototypes and assets, potentially changing some design workflows.

- For the General Public:

  - Further lowers the barrier to creative design, letting people without professional skills use Doubao to create personalized images for social media, personal projects, and more.

  - Makes Doubao more appealing as a one-stop AI assistant platform and increases user stickiness.

Recommended Reading

- [Introduction to ByteDance Seedream Visual Technology]

Today’s Summary

- Deepening of Foundational Models: As DeepSeek-Prover-V2 shows, large models are pushing deeper into specific, highly difficult cognitive tasks (such as mathematical proof), continually testing the limits of AI capabilities.

- Automation of Complex Tasks: The success of PaperCoder validates the potential of multi-agent collaborative systems in handling complex processes (such as paper code reproduction), indicating that AI will play a greater role in automating scientific research, software engineering, and other fields.

- Optimization of Application Experience: As shown by the Doubao “Super Creative 1.0” update, AI applications are increasingly focusing on integration with specific workflows, transforming technology into practical productivity tools by improving intent understanding, efficiency, and controllability.

- Innovation in Scenario Expansion: AI is reaching into more niche, lifestyle-oriented scenarios. Traini, for example, tries to interpret pet emotions, exploring new forms of interaction between humans and animals and meeting users’ emotional needs.

- Parallel Development of Ecosystems: Open-source (DeepSeek, PaperCoder) and closed-source (ByteDance Doubao) ecosystems continue to develop side by side. The former supplies research foundations and tools, while the latter drives commercialization; together they accelerate the iteration and adoption of AI technology.

Overall, AI is accelerating its transition from demonstrating general capabilities to a new stage of solving practical problems in specific fields, improving production efficiency, and creating novel experiences.